
''' | =Smart 3D Cameras for Obstacle Detection, Avoidance and Identification= | ||

=Technology Roadmap Sections and Deliverables= | |||

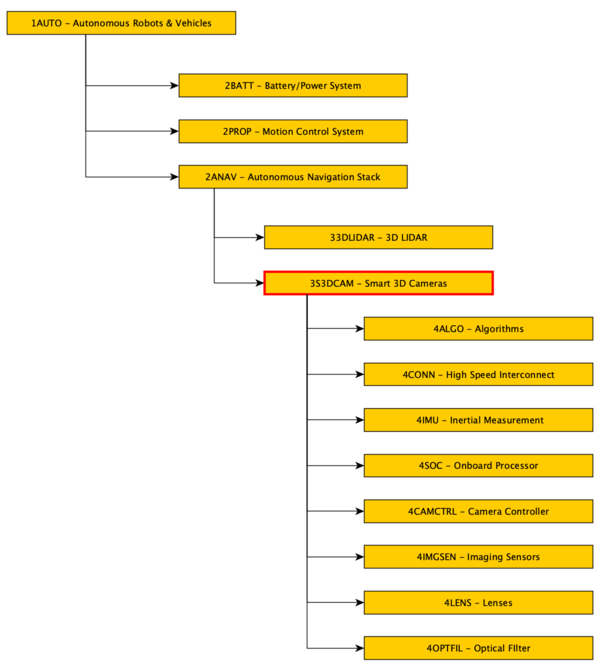

The Smart 3D Camera roadmap is a level 3 roadmap as it enables the level 2 roadmaps for obstacle detection, avoidance and autonomous navigation of robots, drones and cars. | |||

* '''3S3DCAM - Smart 3D Camera''' | |||

[[File:Screen Shot 2019-12-05 at 12.02.21 PM.png|600px]] | |||

= | ==Roadmap Overview== | ||

The robotics and autonomous cars industry is currently lacking an accurate, fast, cheap and reliable sensor which can be used both for obstacle detection, pose estimation and as a safety function. The power budget for a stereo-vision pipeline that can detect, map and avoid its obstacles can be up to 80% of the robot's power budget (in the case of industrial mobile robots). This roadmap explores the feasibility of augmenting stereo cameras to create a safety-certifiable 3D sensor. | |||

[[File:Robot camera stereo.png|600px]] | |||

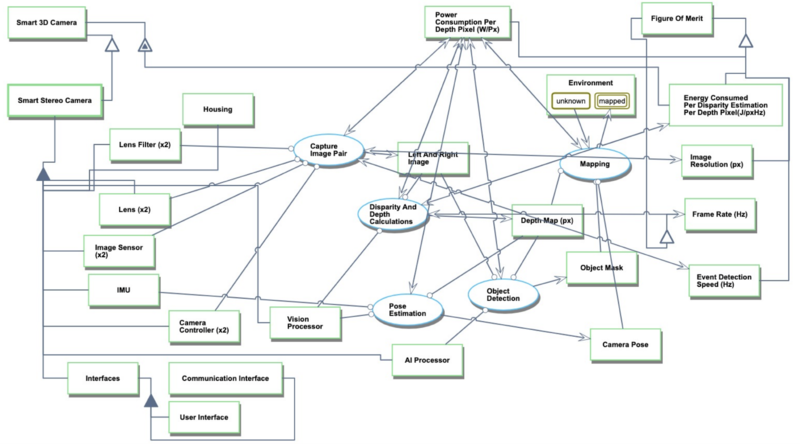

Smart 3D Cameras use a pair of identical optical imaging sensors and IR projectors (in certain use-cases) to capture stereo images of the environment. These images are then processed to calculate the disparity and then extract depth information for all pixels. In addition to the depth map, the scene is segmented to extract objects of interest and to identify them using training neural nets. This roadmap will focus on passive stereo vision cameras that do not use structured light due to their benefits for long range detection. | |||

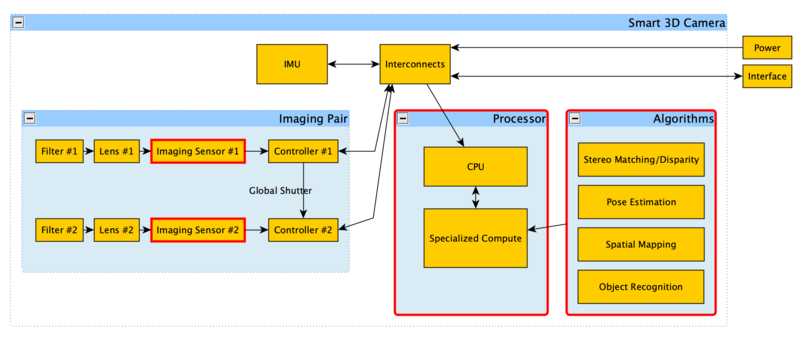

There are three major areas of interest for augmenting a 3D camera - the sensor, the compute and the algorithms. These are highlighted in red on the system decomposition below. | |||

[[File:Screen Shot 2019-12-05 at 12.02.32 PM.png|800px]] | |||

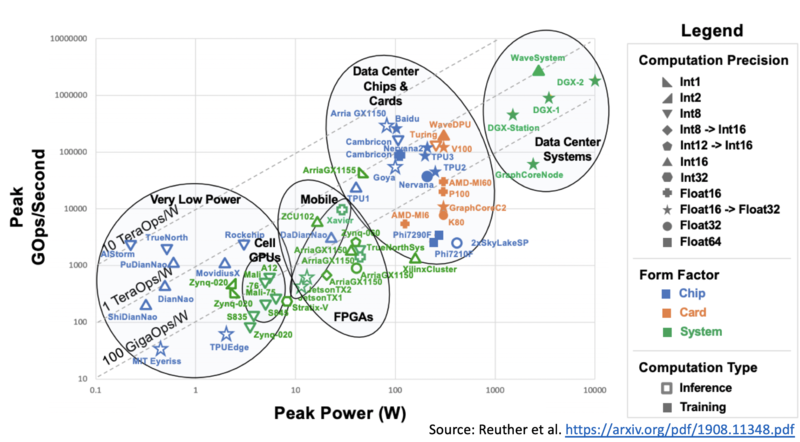

The current state for processing stereo images involves a mix of on-board processing for computing the depth map and segmenting obstacles. Object identification is then farmed out to a cloud service since those algorithms tend be very computationally intensive. There has been significant progress made in the reduction of power consumption for processors, thus enabling a new world of computing intensive applications in embedded platforms. The Smart 3D Camera roadmap will explore onboard processing of all algorithms given the rapid rate of progress in the processing efficiency (Giga Operations per Second per Watt - GOPS/W FOM) for processors. | |||

[[File: | [[File:Screen Shot 2019-12-05 .png|800px]] | ||

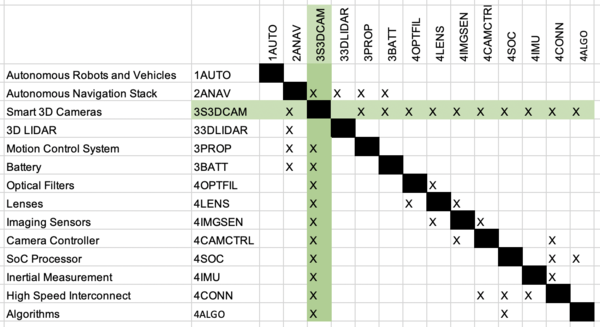

==Design Structure Matrix (DSM) Allocation== | ==Design Structure Matrix (DSM) Allocation== | ||

[[File: | [[File:Screen Shot 2019-12-05 at 12.05.50 PM.png|600px]] | ||

The | The 3S3DCAM roadmap is part of the larger company effort to develop an autonomous navigation stack as it enables 2ANAV. | ||

==Roadmap Model using OPM== | ==Roadmap Model using OPM== | ||

[[File:3S3DCAM OPM Model.png|800px]] | |||

==Figures of Merit== | |||

{| class="wikitable" | |||

|- | |||

! Figure of Merit !! Units !! Description | |||

|- | |||

| '''Power Consumed per Depth Pixel, P_dpx''' || '''W/px''' || The total power consumed by the sensing and processing pipeline divided by the number of depth pixels | |||

|- | |||

| '''Energy Consumed per Disparity Estimation per Depth Pixel, E_dpx''' || '''J/pxHz''' || The total energy consumed by the sensing and processing pipeline divided by the Million Disparity Estimations per Second | |||

|- | |||

| Million Disparity Estimations Per Second (MDE/s) (10^6) || pxHz || Comparison metric defined as: MDE/s = Image resolution * disparities * frame rate | |||

|- | |||

| '''Reliability''' || - ||Number of failures as a percentage of usage | |||

|- | |||

| '''Power Consumption''' || Watts (W)||Power consumed by the entire stereo camera and image processing pipeline to produce a depth map | |||

|- | |||

| Image resolution || Pixel (px) ||Number of pixels in the captured image | |||

|- | |||

| Range (m) || m || The maximum sensing distance | |||

|- | |||

| Accuracy (m) || m || The measuring confidence in the depth data point | |||

|- | |||

| Frame rate (fps or Hz) || fps or Hz || The scanning frame rate of the entire system | |||

|- | |||

| Depth Pixels (px) || px || The number of data points in the generated depth map | |||

|- | |||

| '''Cost ($)''' || $ || The commercial price for a customer, at volume | |||

|- | |||

|} | |||

The fundamental principle for stereo vision is computing distance from image disparity. This can be defined by the following equation where z is the depth, b is the baseline, F is the focal length and d is the disparity. All variables are in meters. | |||

'''z = bF/d''' | |||

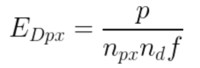

The Energy Consumed per Disparity Estimation Per Depth Pixel, E_dpx which is the total energy cost for acquiring and processing the image divided by the product of the image resolution (n_px), number of disparities (n_d) and frame rate (f). | |||

The | |||

[[File: | [[File:EnergyEquation.png|200px]] | ||

The Power Consumed per Depth Pixel is total power consumption of the processing and object detection/identification pipeline divided by the number of depth pixels. | |||

'''P_dpx = P/dpx = W/px''' | |||

==Alignment with Company Strategic Drivers== | ==Alignment with Company Strategic Drivers== | ||

The | {| class="wikitable" | ||

|- | |||

! # !! Strategic Driver !! Alignment and Targets | |||

|- | |||

| 1 || To develop a compact, high performance and low-power smart 3D camera that can detect objects in both indoors and outdoor environments || The 2S3DCAM roadmap will target the development of a passive stereo camera with onboard computing that has a sensing range of >20m, sensing speed of >30fps at an energy cost lower than 1uW/px in a 15cm x 5cm x 5cm footprint. <span style="background:#00FF00"> '''ALIGNED'''</span> | |||

|- | |||

| 2 || To enable autonomous classification and identification of relevant objects in the scene || The 2S3DCAM roadmap will enable the capability for AI neural nets to run onboard the camera to perform image classification and recognition actions. <span style="background:#00FF00"> '''ALIGNED BUT LOWER PRIORITY'''</span> | |||

|} | |||

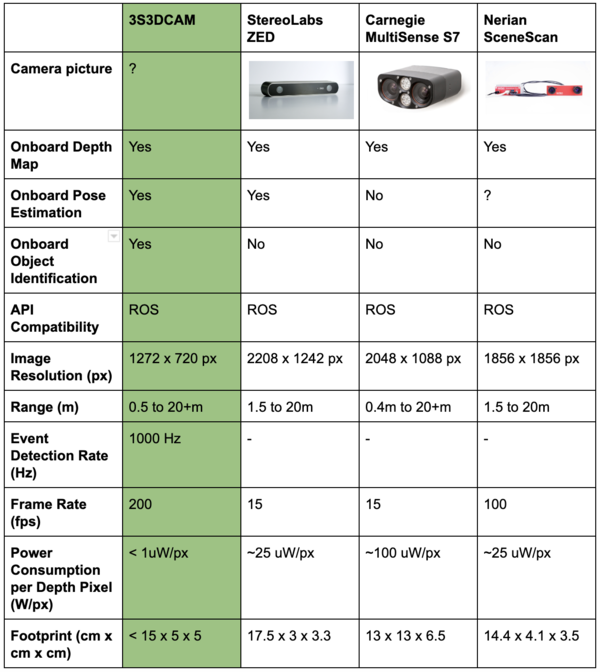

[[File: | ==Positioning of Company vs. Competition== | ||

[[File:Competition Comparison 3d Camera.png|600px]] | |||

By attaining those specifications, our Smart 3D Camera gets much closer to the utopia point. | |||

[[File:Screen Shot 2019-12-05 at 12.12.39 PM.png|800px]] | |||

==Technical Model== | |||

The most important FOM is the Energy Consumed per Depth Pixel, E_dpx which is the total energy cost for acquiring and processing the image divided by the product of the image resolution (n_px), number of disparities (n_d) and frame rate (f). | |||

[[File:EnergyEquation.png|200px]] | |||

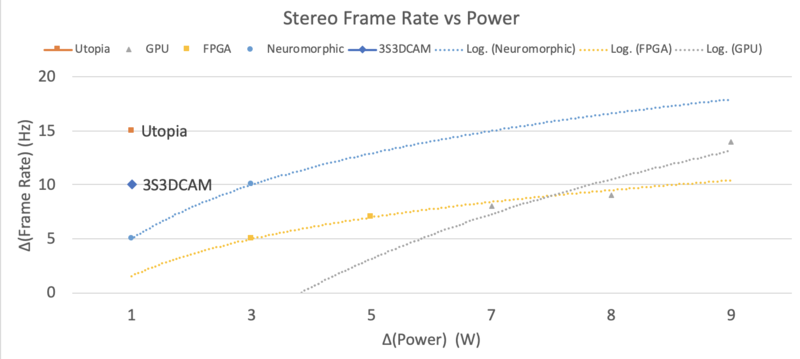

Since the image resolution and number of disparities are constants for a comparison, the relationship can be described as: | |||

[[File: | [[File:EnergyDiffe.png|200px]] | ||

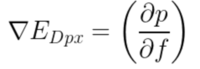

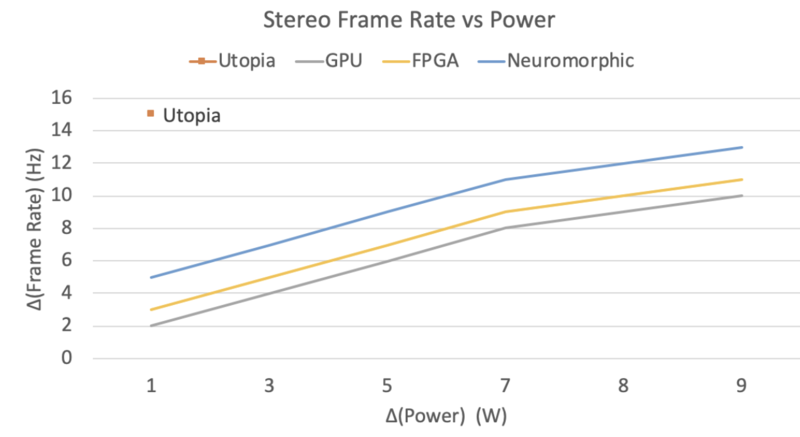

The parameters that affect frame rate is the image resolution, number of disparities and processor/image sensor technology. The curves below were generated empirically based on the data from Andrepoulos et al. that was analyzed for this assignment. The paper is in the publications section. | |||

[[File: | [[File:SpeedPower.png|800px]] | ||

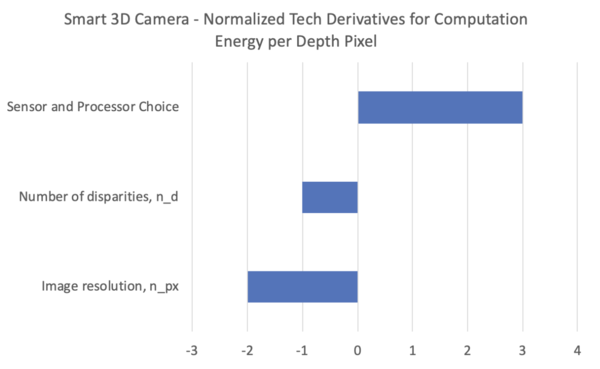

The normalized model with three controllable parameters is shown in the tornado chart below. The imaging sensor and processor choice has a significantly larger impact on power consumption, followed by the image resolution and then by the number of disparities. | |||

[[File:Tornado.png|600px]] | |||

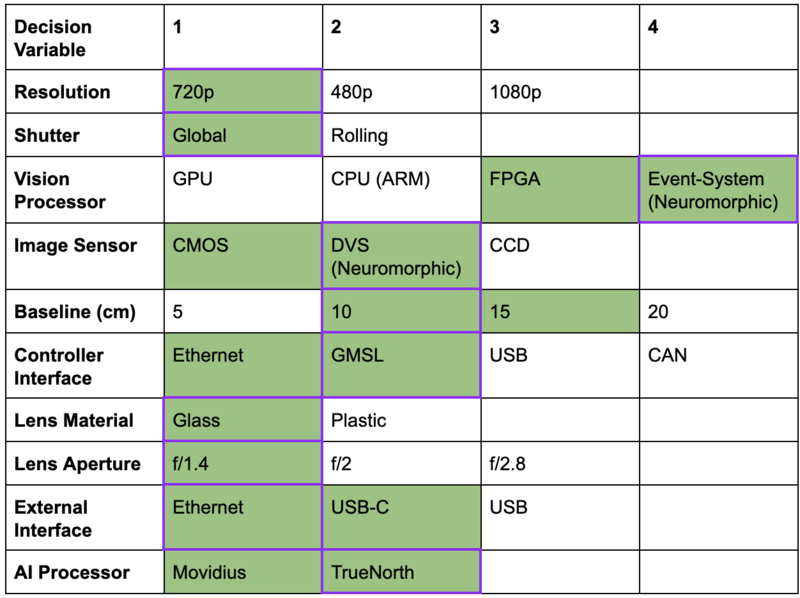

The | This informs the variable selection in the morphological matrix below. The cells highlighted in green are favorable choices and the final choice is boxed in purple. The favorable choices were determined by comparing the current performance available from the technology choice and how it compares against the targets defined for 3S3DCAM. The final choices in purple were chosen because they provide a competitive edge. For example, moving to the neuromorphic event system for the vision processor instead of FPGA enables the company to be on the leading S-curve for low-latency vision processing. Similarly using the neuromorphic imaging sensor and TrueNorth AI processor (neuromorphic) enables a step reduction in power consumption. The remaining parameters such as resolution, shutter, baseline, lens FOV were chosen to optimize for the camera footprint and performance. | ||

[[File: | [[File:MorphMatrix.png|800px]] | ||

==Financial Model== | ==Financial Model== | ||

[[File: | As the company investing in the development of Smart 3D cameras, two types of financial models matter: 1) the ROI model for a customer which helps inform the BOM cost target and pricing limits 2) the NPV model for prioritizing R&D projects. This analysis will be limited to the #2 as the customer CONOPS is currently not well understood. | ||

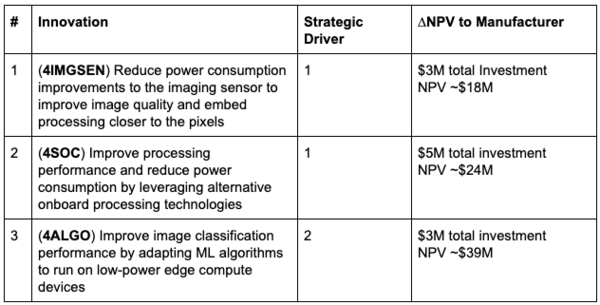

To prioritize the R&D projects, it is important to work from the strategic drivers. To achieve both the strategic drivers, innovations are needed for high performance and low power in the imaging sensor, the compute architecture and the classification algorithms. | |||

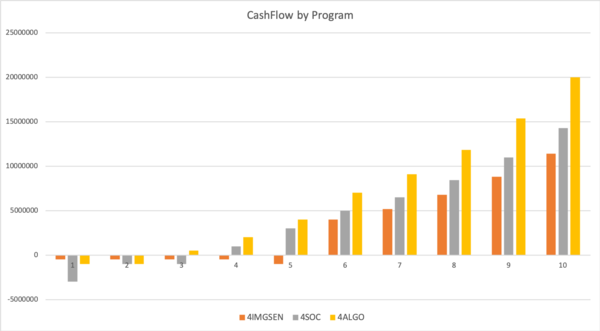

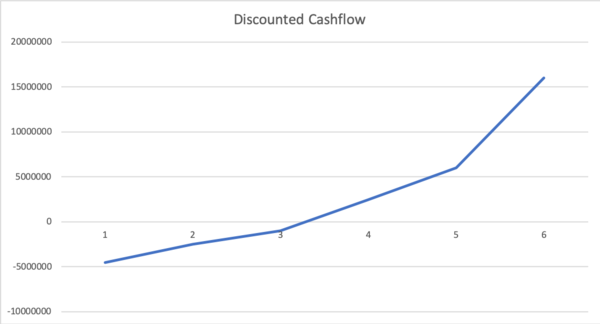

The company has $11 million to spend on R&D programs. The NPV for each of the programs is calculated assuming a discount rate of 7% and a product life of ~10 years. Since the exact monetary benefit of each of these innovations is hard to characterize, I’ve guesstimated the impact to sales and cash flow. | |||

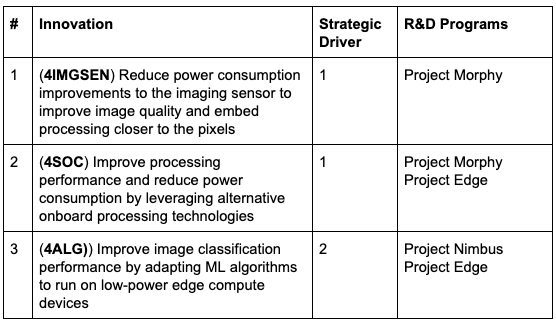

Based on the outcome of the financial analysis, the following innovation programs will be funded: | |||

[[File:R d table.png|600px]] | |||

[[File:Camera Cashflow.png|600px]] | |||

[[File:Dcf camera 1.png|600px]] | |||

==List of R&T Projects and Prototypes== | ==List of R&T Projects and Prototypes== | ||

Based on the strategic drivers, the following programs will be funded: | |||

[[File:Screen Shot 2019-12-05 at 11.50.34 AM.png|600px]] | |||

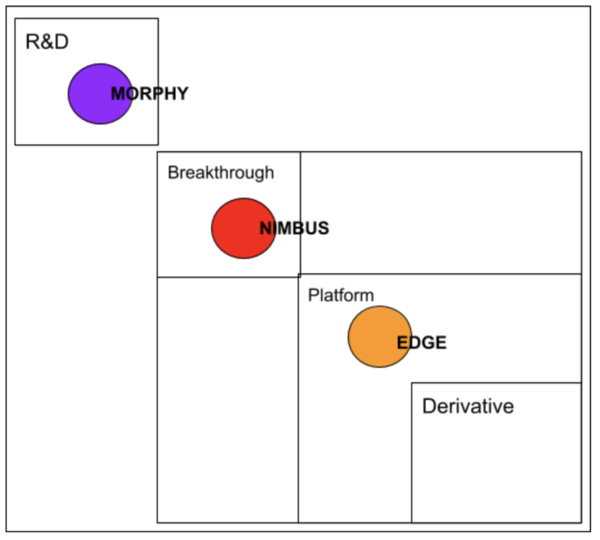

1.'''Project Morphy (TRL5 -> TRL7)''' | |||

Morphy is an ambitious R&D program to accelerate the technology maturity of neuromorphic sensors and computers for use in production grade cameras. This project will license the two patents listed below and validate concepts from paper #2. | |||

2. '''Project Edge (TRL6 -> TRL9)''' | |||

Edge will develop methods to leverage FPGAs and ASICs to perform pixel computation closer to the sensor in lieu of power hungry GPUs. This project will reproduce and improve upon the results from paper #1 | |||

3. '''Project Nimbus (TRL6 -> TRL9)''' | |||

Nimbus is an ambitious project to simplify ML algorithms and models so that they can be performant on embedded devices. This project will build upon ideas from paper #3. | |||

These three projects can be classified as follows: | |||

[[File:R d Portfolio.png|600px]] | |||

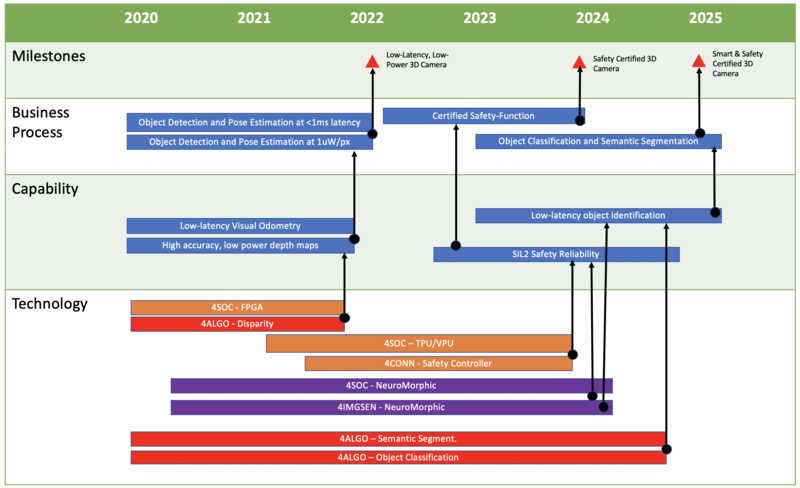

The development and deployment timeline is as follows: | |||

[[File: | [[File:Screen Shot 2019-12-05 at 11.58.17 AM.png|800px]] | ||

==Key Publications, Presentations and Patents== | ==Key Publications, Presentations and Patents== | ||

[[File: | ===Patents=== | ||

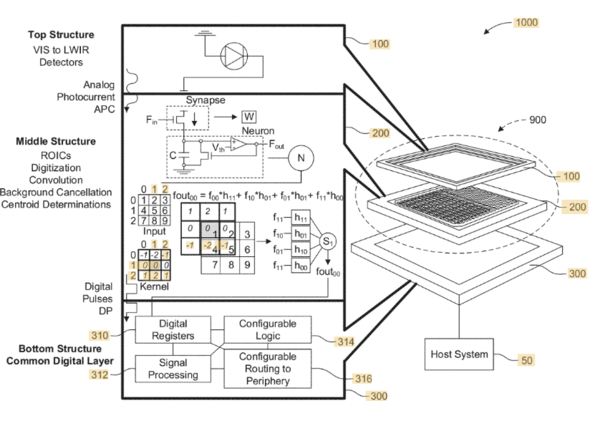

'''Dawson et al. Neuromorphic Digital Focal Plane Array. US Pat Pending. US20180278868A1''' | |||

This patent claims new techniques for creating imaging sensors that leverage the principle of neuromorphism to embed pixel processing directly onto the sensor. For a Smart 3D camera this presents a disruptive option for two FOMs - reduce power consumption and increased frame rate. | |||

[[File:Dawson et al.png|600px]] | |||

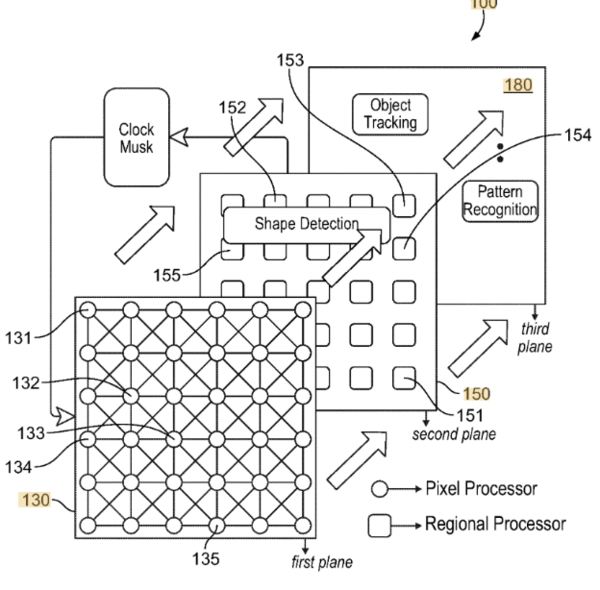

'''Bobda et al. Reconfigurable 3D Pixel-Parallel Neuromorphic Architecture for Smart Image Sensor. Pending. US20190325250A1''' | |||

This patent also leverages neuromorphism but instead of only tracking pixel changes, it also embeds processing elements into different regions of the image sensor. With this technology, it will be feasible to embed intelligent processing of shapes and features close to the image capture system. By leveraging image sensor embedded processing, a magnitude improvement in power efficiency and performance can be achieved. | |||

[[File:Bobda et al.png|600px]] | |||

===Publications=== | |||

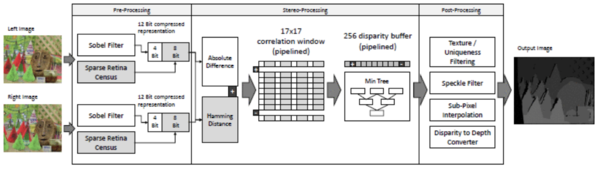

'''Michalik et al. Real time smart stereo camera based on FPGA-SoC. 2017. IEEE-RAS''' | |||

This work presents a realtime smart stereo camera system implementation resembling the full stereo processing pipeline in a single FPGA device. The paper introduces a novel memory optimized stereo processing algorithm ”Sparse Retina Census Correlation” (SRCC) that embodies a combination of two well established window based stereo matching approaches. The presented smart camera solution has demonstrated real-time stereo processing of 1280×720 pixel depth images with 256 disparities on a Zynq XC7Z030 FPGA device at 60fps. This approach is ~3x faster than the nearest competitor. | |||

[[File:Michalik et al .png|600px]] | |||

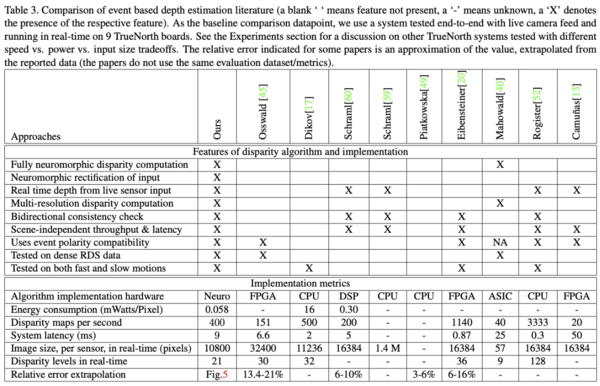

'''Andrepoulos et al. A Low Power, High Throughput, Fully Event-Based Stereo System. 2018 IEEE CVF''' | |||

This paper uses neuromorphic event-based hardware to implement stereo vision. This is the first time that an end-to-end stereo pipeline from image acquisition and rectification, multi-scale spatiotemporal stereo correspondence, winner-take-all, to disparity regularization is implemented fully on event-based hardware. Using a cluster of TrueNorth neurosynaptic processors, the authors demonstrates their ability to process bilateral event-based inputs streamed live by Dynamic Vision Sensors (DVS), at up to 2,000 disparity maps per second, producing high fidelity disparities which are in turn used to reconstruct, at low power, the depth of events produced from rapidly changing scenes. They consume ~200x lesser power at 0.058mW/pixel! | |||

[[File:Andrepoulos et al.png|600px]] | |||

'''Shin et al. An 1.92mW Feature Reuse Engine based on inter-frame similarity for low-power object recognition in video frames. 2014 IEEE''' | |||

This paper proposes a Feature Reuse Engine (FReE) to achieve low-power object recognition in video frames. Unlike previous works, proposed FReE reuses 58% of features from previous frame with inter-frame similarity. Power consumption of object recognition processor is reduced by 31% with the proposed FReE which consumes only 1.92mW in a 130nm CMOS technology. This has potential for reducing power consumption for smart stereo cameras. | |||

==Technology Strategy Statement== | ==Technology Strategy Statement== | ||

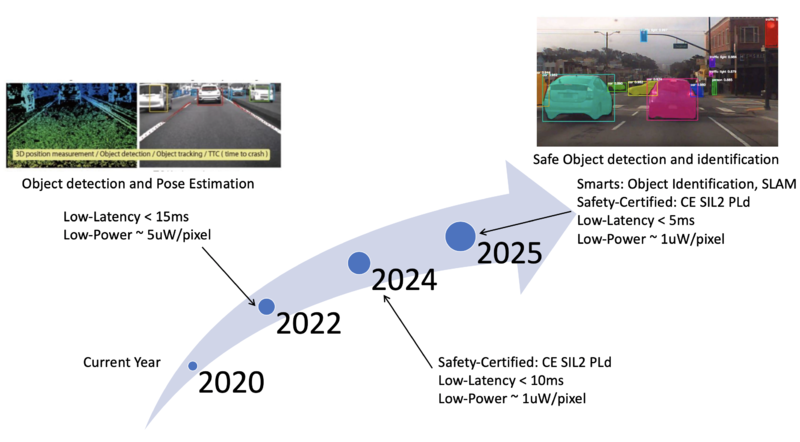

[[File:Swoosh camera.png|800px]] | |||

''' | Our goal is to be the industry leader in safety-rated 3D sensors for autonomous navigation,especially for industrial robots and drones. '''We intend to get there by 2025 by developing safety-certified smart 3D cameras that cost less than $500. These 3D cameras will leverage cutting-edge image sensors, processors and algorithms that can sense and classify the world at greater than 720p resolution at under 5ms latency at a low power consumption of 1uW/pixel.''' | ||

To achieve these goals, we will invest $11MM in three programs (Morphy, Edge and Nimbus) that has a NPV of $81M over 10 years. | |||

Latest revision as of 13:14, 10 December 2019

Smart 3D Cameras for Obstacle Detection, Avoidance and Identification

Technology Roadmap Sections and Deliverables

The Smart 3D Camera roadmap is a level 3 roadmap as it enables the level 2 roadmaps for obstacle detection, avoidance and autonomous navigation of robots, drones and cars.

- 3S3DCAM - Smart 3D Camera

Roadmap Overview

The robotics and autonomous car industries currently lack an accurate, fast, cheap and reliable sensor that can be used for obstacle detection, for pose estimation and as a safety function. The power budget for a stereo-vision pipeline that can detect, map and avoid obstacles can be up to 80% of the robot's power budget (in the case of industrial mobile robots). This roadmap explores the feasibility of augmenting stereo cameras to create a safety-certifiable 3D sensor.

Smart 3D Cameras use a pair of identical optical imaging sensors and IR projectors (in certain use-cases) to capture stereo images of the environment. These images are then processed to calculate the disparity and then extract depth information for all pixels. In addition to the depth map, the scene is segmented to extract objects of interest and to identify them using trained neural nets. This roadmap will focus on passive stereo vision cameras, which do not use structured light, because of their benefits for long-range detection.

There are three major areas of interest for augmenting a 3D camera - the sensor, the compute and the algorithms. These are highlighted in red on the system decomposition below.

The current state of the art for processing stereo images involves on-board processing for computing the depth map and segmenting obstacles. Object identification is then farmed out to a cloud service since those algorithms tend to be very computationally intensive. Significant progress has been made in reducing the power consumption of processors, enabling a new world of compute-intensive applications on embedded platforms. The Smart 3D Camera roadmap will explore onboard processing of all algorithms given the rapid rate of progress in processing efficiency (Giga Operations per Second per Watt - GOPS/W FOM) for processors.

Design Structure Matrix (DSM) Allocation

The 3S3DCAM roadmap is part of the larger company effort to develop an autonomous navigation stack as it enables 2ANAV.

Roadmap Model using OPM

Figures of Merit

| Figure of Merit | Units | Description |
|---|---|---|
| Power Consumed per Depth Pixel, P_dpx | W/px | The total power consumed by the sensing and processing pipeline divided by the number of depth pixels |
| Energy Consumed per Disparity Estimation per Depth Pixel, E_dpx | J/(px·Hz) | The total energy consumed by the sensing and processing pipeline divided by the Million Disparity Estimations per Second |
| Million Disparity Estimations per Second, MDE/s | 10^6 px·Hz | Comparison metric defined as: MDE/s = image resolution × disparities × frame rate |
| Reliability | - | Number of failures as a percentage of usage |
| Power Consumption | W | Power consumed by the entire stereo camera and image processing pipeline to produce a depth map |
| Image Resolution | px | Number of pixels in the captured image |
| Range | m | The maximum sensing distance |
| Accuracy | m | The measuring confidence in the depth data point |
| Frame Rate | fps or Hz | The scanning frame rate of the entire system |
| Depth Pixels | px | The number of data points in the generated depth map |
| Cost | $ | The commercial price for a customer, at volume |

The fundamental principle of stereo vision is computing distance from image disparity. This is defined by the following equation, where z is the depth, b is the baseline, F is the focal length and d is the disparity. All variables are in meters.

z = bF/d
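As a quick numerical check of the relation above, here is a minimal Python sketch. The function name and the sample baseline, focal length and disparity values are illustrative assumptions, not figures from this roadmap.

```python
def depth_from_disparity(baseline_m: float, focal_length_m: float, disparity_m: float) -> float:
    """Return depth z = b * F / d, with all quantities in meters."""
    if disparity_m <= 0:
        # Zero disparity corresponds to a point at infinity; negative is invalid.
        raise ValueError("disparity must be positive")
    return baseline_m * focal_length_m / disparity_m


# Hypothetical values: 10 cm baseline, 4 mm focal length,
# 20 micrometers of disparity measured on the sensor.
z = depth_from_disparity(baseline_m=0.10, focal_length_m=0.004, disparity_m=20e-6)
print(f"depth = {z:.1f} m")
```

Because z is inversely proportional to d, halving the disparity doubles the estimated depth, which is why small disparity errors matter most at long range.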

The Energy Consumed per Disparity Estimation per Depth Pixel, E_dpx, is the total energy cost for acquiring and processing the image divided by the product of the image resolution (n_px), the number of disparities (n_d) and the frame rate (f).

The Power Consumed per Depth Pixel, P_dpx, is the total power consumption of the processing and object detection/identification pipeline divided by the number of depth pixels.

P_dpx = P/dpx (units: W/px)
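The figures of merit defined above can be sketched in a few lines of Python. The function names are hypothetical, and the 5 W pipeline power is an assumed value used only to show the arithmetic. The resolution, disparity count and frame rate are the operating point quoted for the FPGA system in the publications section.

```python
def mde_per_second(n_px: int, n_d: int, frame_rate_hz: float) -> float:
    """Million Disparity Estimations per second: n_px * n_d * f / 1e6."""
    return n_px * n_d * frame_rate_hz / 1e6


def energy_per_estimation(total_energy: float, n_px: int, n_d: int, frame_rate_hz: float) -> float:
    """E_dpx: total energy cost divided by the product n_px * n_d * f."""
    return total_energy / (n_px * n_d * frame_rate_hz)


def power_per_depth_pixel(power_w: float, depth_pixels: int) -> float:
    """P_dpx: total pipeline power divided by the number of depth pixels (W/px)."""
    return power_w / depth_pixels


# 1280x720 depth images, 256 disparities, 60 fps.
n_px = 1280 * 720
print(mde_per_second(n_px, 256, 60))      # throughput in MDE/s

# Assumed 5 W pipeline power (hypothetical, not a measured value).
print(power_per_depth_pixel(5.0, n_px))   # W/px
```

Keeping the three quantities as separate functions makes it easy to compare candidate sensors and processors at a fixed resolution and disparity count.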

Alignment with Company Strategic Drivers

| # | Strategic Driver | Alignment and Targets |
|---|---|---|
| 1 | To develop a compact, high-performance and low-power smart 3D camera that can detect objects in both indoor and outdoor environments | The 3S3DCAM roadmap will target the development of a passive stereo camera with onboard computing that has a sensing range of >20 m and a sensing speed of >30 fps at a power cost lower than 1 µW/px in a 15 cm x 5 cm x 5 cm footprint. ALIGNED |
| 2 | To enable autonomous classification and identification of relevant objects in the scene | The 3S3DCAM roadmap will enable AI neural nets to run onboard the camera to perform image classification and recognition. ALIGNED BUT LOWER PRIORITY |
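To get a feel for what the 1 µW/px target implies, the short Python sketch below converts it into a total power budget. It assumes one depth pixel per image pixel at 1280x720 (the 720p figure from the technology strategy statement). The function and constant names are hypothetical.

```python
TARGET_POWER_PER_PIXEL_W = 1e-6  # 1 uW/px target from the strategic-driver table


def power_budget_w(width_px: int, height_px: int,
                   target_w_per_px: float = TARGET_POWER_PER_PIXEL_W) -> float:
    """Total pipeline power allowed if each depth pixel may cost target_w_per_px."""
    return width_px * height_px * target_w_per_px


# Assumption: the depth map has the same 1280x720 resolution as the image.
budget = power_budget_w(1280, 720)
print(f"power budget at 720p: {budget:.3f} W")
```

Under these assumptions the whole sensing and processing pipeline would have to run on a little under one watt.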

Positioning of Company vs. Competition

By attaining those specifications, our Smart 3D Camera gets much closer to the utopia point.

Technical Model

The most important FOM is the Energy Consumed per Disparity Estimation per Depth Pixel, E_dpx, which is the total energy cost for acquiring and processing the image divided by the product of the image resolution (n_px), the number of disparities (n_d) and the frame rate (f).

Since the image resolution and number of disparities are constants for a comparison, the relationship can be described as:

The parameters that affect frame rate are the image resolution, the number of disparities and the processor/image sensor technology. The curves below were generated empirically from the data in Andreopoulos et al., which was analyzed for this assignment. The paper is listed in the publications section.

The normalized model with three controllable parameters is shown in the tornado chart below. The choice of imaging sensor and processor has the largest impact on power consumption, followed by the image resolution and then by the number of disparities.

This informs the variable selection in the morphological matrix below. The cells highlighted in green are favorable choices and the final choice is boxed in purple. The favorable choices were determined by comparing the performance currently available from each technology choice against the targets defined for 3S3DCAM. The final choices in purple were chosen because they provide a competitive edge. For example, moving to the neuromorphic event system for the vision processor instead of an FPGA puts the company on the leading S-curve for low-latency vision processing. Similarly, using the neuromorphic imaging sensor and the TrueNorth AI processor (neuromorphic) enables a step reduction in power consumption. The remaining parameters, such as resolution, shutter, baseline and lens FOV, were chosen to optimize the camera footprint and performance.

Financial Model

For a company investing in the development of Smart 3D cameras, two types of financial models matter: 1) the ROI model for a customer, which helps inform the BOM cost target and pricing limits, and 2) the NPV model for prioritizing R&D projects. This analysis is limited to the second, as the customer CONOPS is currently not well understood. To prioritize the R&D projects, it is important to work from the strategic drivers. To achieve both strategic drivers, innovations are needed for high performance and low power in the imaging sensor, the compute architecture and the classification algorithms.

The company has $11 million to spend on R&D programs. The NPV for each of the programs is calculated assuming a discount rate of 7% and a product life of ~10 years. Since the exact monetary benefit of each of these innovations is hard to characterize, I’ve guesstimated the impact to sales and cash flow. Based on the outcome of the financial analysis, the following innovation programs will be funded:
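The NPV calculation described above can be sketched as follows. This is a minimal illustration that uses the 7% discount rate and 10-year product life from the text. The cash flows are hypothetical placeholders, not the figures used in this roadmap's analysis.

```python
def npv(discount_rate: float, cash_flows: list[float]) -> float:
    """Net present value; cash_flows[0] occurs today, cash_flows[t] at the end of year t."""
    return sum(cf / (1.0 + discount_rate) ** t for t, cf in enumerate(cash_flows))


# Hypothetical program: $3M invested today, then $0.6M of net cash
# inflow at the end of each year over a 10-year product life.
flows_musd = [-3.0] + [0.6] * 10
print(f"NPV at 7%: {npv(0.07, flows_musd):.2f} $M")
```

Programs can then be ranked by NPV and funded in that order until the R&D budget is exhausted, which is one simple way to apply the prioritization described here.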

List of R&T Projects and Prototypes

Based on the strategic drivers, the following programs will be funded:

1. Project Morphy (TRL5 -> TRL7)

Morphy is an ambitious R&D program to accelerate the technology maturity of neuromorphic sensors and computers for use in production-grade cameras. This project will license the two patents listed below and validate concepts from paper #2.

2. Project Edge (TRL6 -> TRL9)

Edge will develop methods to leverage FPGAs and ASICs to perform pixel computation closer to the sensor in lieu of power-hungry GPUs. This project will reproduce and improve upon the results from paper #1.

3. Project Nimbus (TRL6 -> TRL9)

Nimbus is an ambitious project to simplify ML algorithms and models so that they can be performant on embedded devices. This project will build upon ideas from paper #3.

These three projects can be classified as follows:

The development and deployment timeline is as follows:

Key Publications, Presentations and Patents

Patents

Dawson et al. Neuromorphic Digital Focal Plane Array. US Pat Pending. US20180278868A1

This patent claims new techniques for creating imaging sensors that leverage the principle of neuromorphism to embed pixel processing directly onto the sensor. For a Smart 3D camera this presents a disruptive option for two FOMs: reduced power consumption and increased frame rate.

Bobda et al. Reconfigurable 3D Pixel-Parallel Neuromorphic Architecture for Smart Image Sensor. Pending. US20190325250A1

This patent also leverages neuromorphism, but instead of only tracking pixel changes, it also embeds processing elements into different regions of the image sensor. With this technology, it will be feasible to embed intelligent processing of shapes and features close to the image capture system. By leveraging processing embedded in the image sensor, an order-of-magnitude improvement in power efficiency and performance can be achieved.

Publications

Michalik et al. Real time smart stereo camera based on FPGA-SoC. 2017. IEEE-RAS

This work presents a real-time smart stereo camera system implementation resembling the full stereo processing pipeline in a single FPGA device. The paper introduces a novel memory-optimized stereo processing algorithm, “Sparse Retina Census Correlation” (SRCC), that embodies a combination of two well-established window-based stereo matching approaches. The presented smart camera solution has demonstrated real-time stereo processing of 1280×720 pixel depth images with 256 disparities on a Zynq XC7Z030 FPGA device at 60 fps. This approach is ~3x faster than the nearest competitor.

Andreopoulos et al. A Low Power, High Throughput, Fully Event-Based Stereo System. 2018 IEEE CVF

This paper uses neuromorphic event-based hardware to implement stereo vision. This is the first time that an end-to-end stereo pipeline, from image acquisition and rectification through multi-scale spatiotemporal stereo correspondence and winner-take-all to disparity regularization, has been implemented fully on event-based hardware. Using a cluster of TrueNorth neurosynaptic processors, the authors demonstrate the ability to process bilateral event-based inputs streamed live by Dynamic Vision Sensors (DVS) at up to 2,000 disparity maps per second, producing high-fidelity disparities which are in turn used to reconstruct, at low power, the depth of events produced from rapidly changing scenes. The system consumes ~200x less power, at 0.058 mW/pixel.

Shin et al. An 1.92mW Feature Reuse Engine based on inter-frame similarity for low-power object recognition in video frames. 2014 IEEE

This paper proposes a Feature Reuse Engine (FReE) to achieve low-power object recognition in video frames. Unlike previous works, the proposed FReE reuses 58% of the features from the previous frame by exploiting inter-frame similarity. The power consumption of the object recognition processor is reduced by 31% with the proposed FReE, which consumes only 1.92 mW in a 130 nm CMOS technology. This has the potential to reduce power consumption in smart stereo cameras.

Technology Strategy Statement

Our goal is to be the industry leader in safety-rated 3D sensors for autonomous navigation, especially for industrial robots and drones. We intend to get there by 2025 by developing safety-certified smart 3D cameras that cost less than $500. These 3D cameras will leverage cutting-edge image sensors, processors and algorithms that can sense and classify the world at greater than 720p resolution and under 5 ms latency, at a low power consumption of 1 µW/pixel. To achieve these goals, we will invest $11M in three programs (Morphy, Edge and Nimbus) that have an NPV of $81M over 10 years.