Difference between revisions of "Augmented, Virtual, and Mixed Reality"

| (24 intermediate revisions by 2 users not shown) | |||

| Line 1: | Line 1: | ||

==Roadmap Overview== | ==Roadmap Overview== | ||

Augmented, virtual, and mixed realities reside on a continuum and blur the line between the actual world and the artificial world - both of which are perceived through human senses. | Augmented, virtual, and mixed realities reside on a continuum and blur the line between the actual world and the artificial world - both of which are currently perceived through human senses. | ||

Augmented reality devices enable digital elements to be added to a live representation of the real world. This could be as simple as adding virtual images onto the camera screen of a smartphone (as recently popularized by mobile applications Pokemon Go, Snapchat, et al). Virtual reality, which lies at the other end of the spectrum, seeks to create a completely virtual | Augmented reality devices enable digital elements to be added to a live representation of the real world. This could be as simple as adding virtual images onto the camera screen of a smartphone (as recently popularized by mobile applications Pokemon Go, Snapchat, et al). Virtual reality, which lies at the other end of the spectrum, seeks to create a completely virtual and immersive environment for the user. Whereas augmented reality incorporates digital elements onto a live model of the real world, virtual reality seeks to exclude the real world altogether and transport the user to a new realm through complete telepresence. | ||

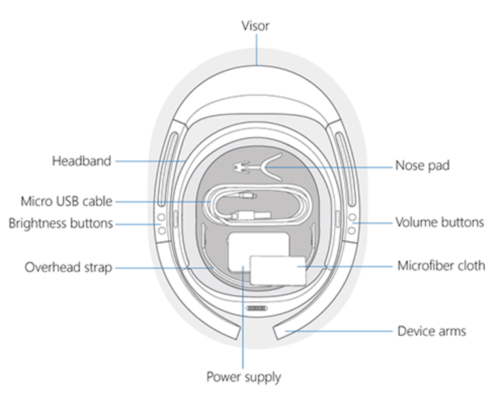

Mixed reality, on the other hand, incorporates both of these ideas to create a hybrid experience where the user can interact with both the real and virtual world. Mixed reality devices can take many forms. For the purposes of this technology roadmap we've elected to narrow our focus to wearable headgear (heads up) devices. These devices are typically fashioned with visual displays and tracking technology that allow six degrees of freedom (forward/backward, up/down, left/right, pitch, yaw, roll) and immersive experiences. The elements of form for an example mixed reality product, the Microsoft HoloLens (1st generation), are depicted in the figures below. | Mixed reality, on the other hand, incorporates both of these ideas to create a hybrid experience where the user can interact with both the real and virtual world. Mixed reality devices can take many forms. For the purposes of this technology roadmap we've elected to narrow our focus to wearable headgear (heads up) devices. These devices are typically fashioned with visual displays and tracking technology that allow six degrees of freedom (forward/backward, up/down, left/right, pitch, yaw, roll) and immersive experiences. The elements of form for an example mixed reality product, the Microsoft HoloLens (1st generation), are depicted in the figures below. | ||

[[File:HoloLens_Overview_Pic1.png]] | [[File:HoloLens_Overview_Pic1.png|500px]] [[File:HoloLens.png|500px]] | ||

'''Figure 1''' - Mixed Reality Example (Microsoft Hololens) | '''Figure 1''' - Mixed Reality Example (Microsoft Hololens) | ||

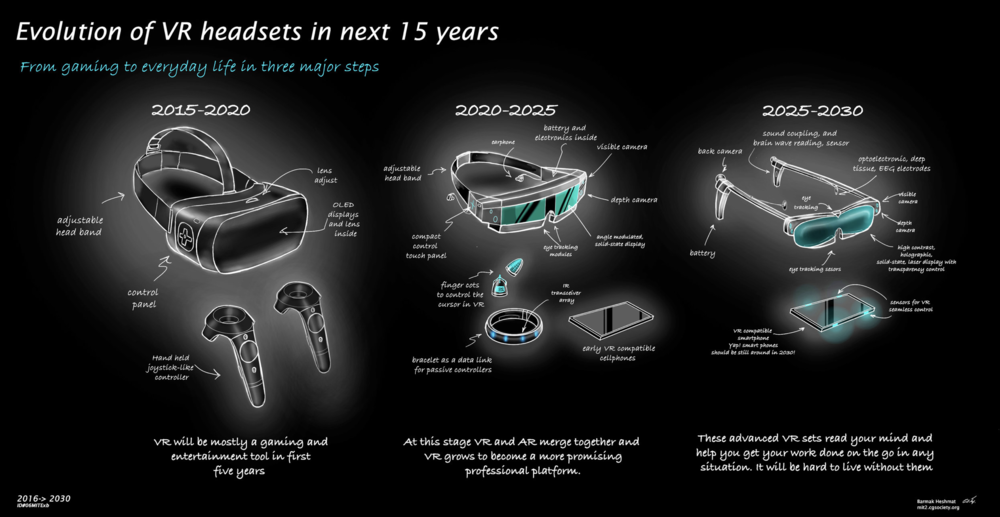

In terms of functional taxonomy, this technology is primarily intended to exchange information. The design of these devices today allow for information to be exchanged through two of the five human perceptual systems: (1) visual system & (2) auditory system. The combination of hardware, software, and informational/environmental inputs allow mixed reality users to interact and anchor virtual objects to the real world environment. One vision for the future is depicted below (courtesy of Barmak Heshmat, MIT Media Lab). | |||

[[File:VR.png|1000px]] | |||

'''Figure 2''' - Sample Mixed Reality Roadmap | |||

==Design Structure Matrix (DSM) Allocation== | ==Design Structure Matrix (DSM) Allocation== | ||

| Line 21: | Line 21: | ||

==Roadmap Model using Object-Process-Methodology (OPM)== | ==Roadmap Model using Object-Process-Methodology (OPM)== | ||

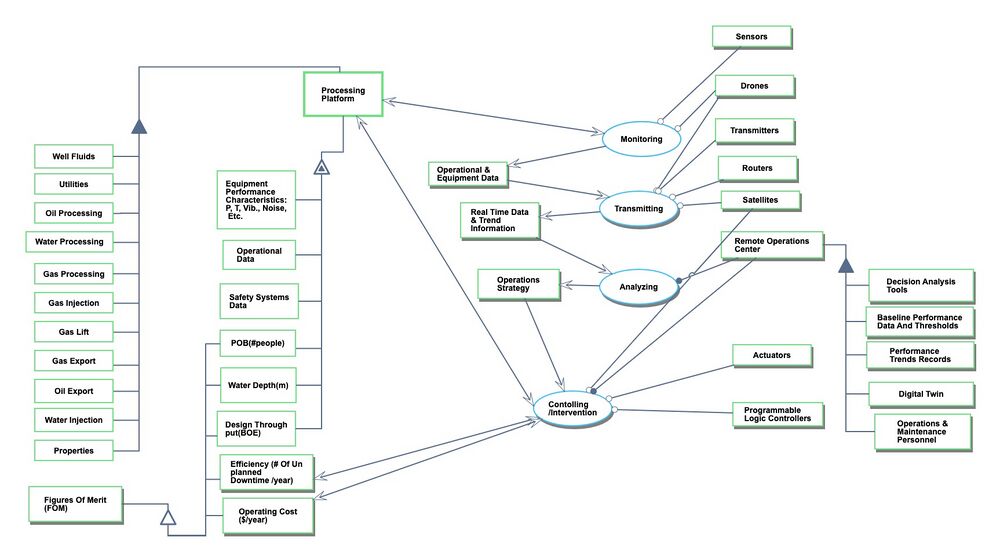

We provide an Object-Process-Diagram (OPD) of the augmented, virtual, and mixed reality roadmap in Figure 3 below. This diagram captures the main object of the roadmap, mixed reality device, its various instances including main competitors, its decomposition into subsystems (hardware, battery, operating system, etc), its characterization by Figures of Merit (FOMs) as well as the main processes (Exchanging, Recharging, and Displaying). | |||

[[File:OPD.jpeg|1000px]] | |||

'''Figure 4''' - Mixed Reality OPD | |||

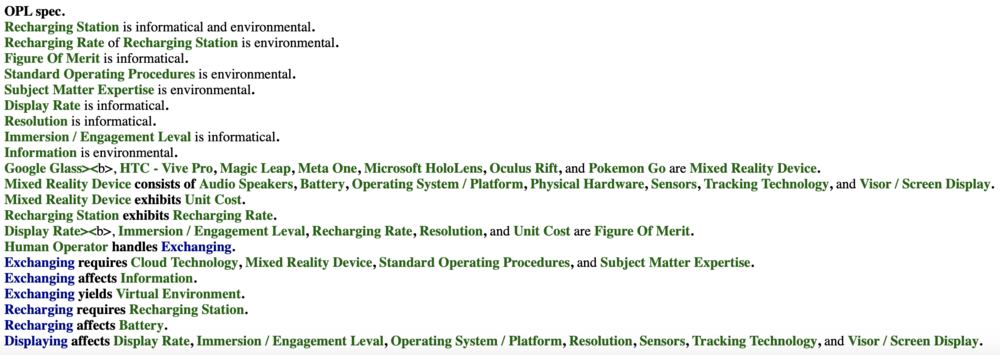

'''Figure | An Object-Process-Language (OPL) description of the roadmap scope is auto-generated and given below. It reflects the same content as the previous figure, but in a formal natural language. | ||

[[File:MROPL.png|1000px]] | |||

'''Figure 5''' - Mixed Reality OPL | |||

==Figures of Merit (FOM) Definition== | ==Figures of Merit (FOM) Definition== | ||

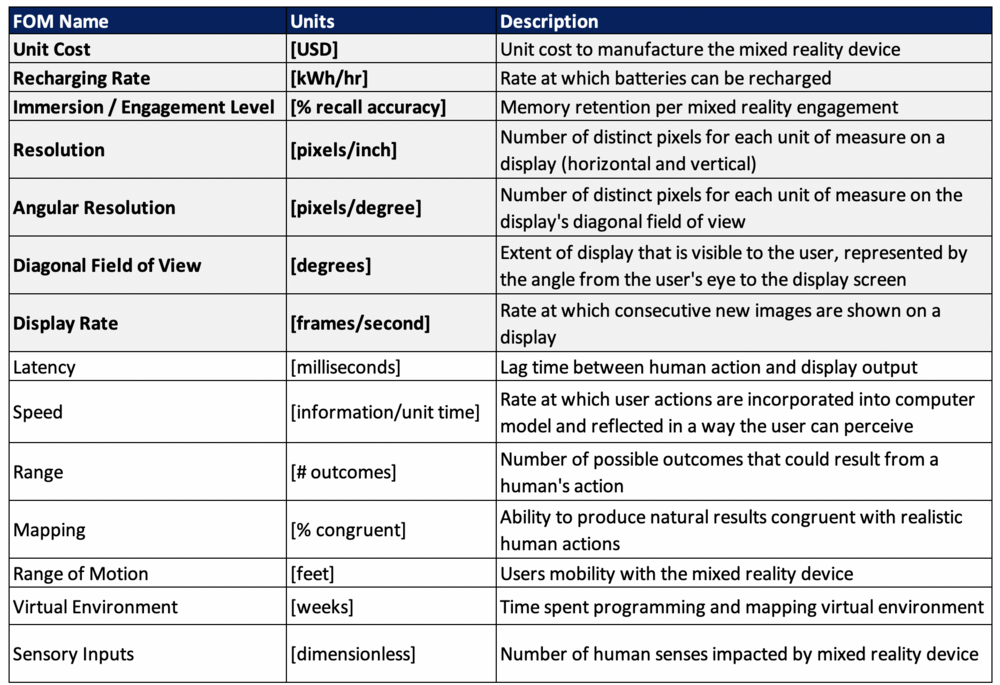

The table below shows a list of FOMs by which mixed reality devices can be assessed. The first four (shown in bold) were included on the OPD; the others will follow as this technology roadmap is developed. Several of these are similar to the FOMs that are used to compare traditional motion pictures, modern gaming consoles, and other technologies that aim to exchange information. | |||

It's worth noting that several of the FOMs are requisites to the mixed reality Immersion / Engagement Level, one of the primary FOMs. In this way, these can be thought of as a FOM chain. Latency and Mapping, for example, contribute greatly to the overall memory retention per use of the mixed reality device. This is true for many of the FOMs listed below. | |||

[[File:FOMTable.png|1000px]] | |||

'''Figure 6''' - Mixed Reality Figures of Merit | |||

Besides defining what the FOMs are, this section of the roadmap also contains the FOM trends over time dFOM/dt as well as some of the key governing equations that underpin the technology. These governing equations can be derived from physics (or chemistry, biology, etc) or they can be empirically derived from a multivariate regression model. The specific governing equations for mixed reality technology will be updated as the roadmap progresses. | |||

===Display Rate Trajectory=== | |||

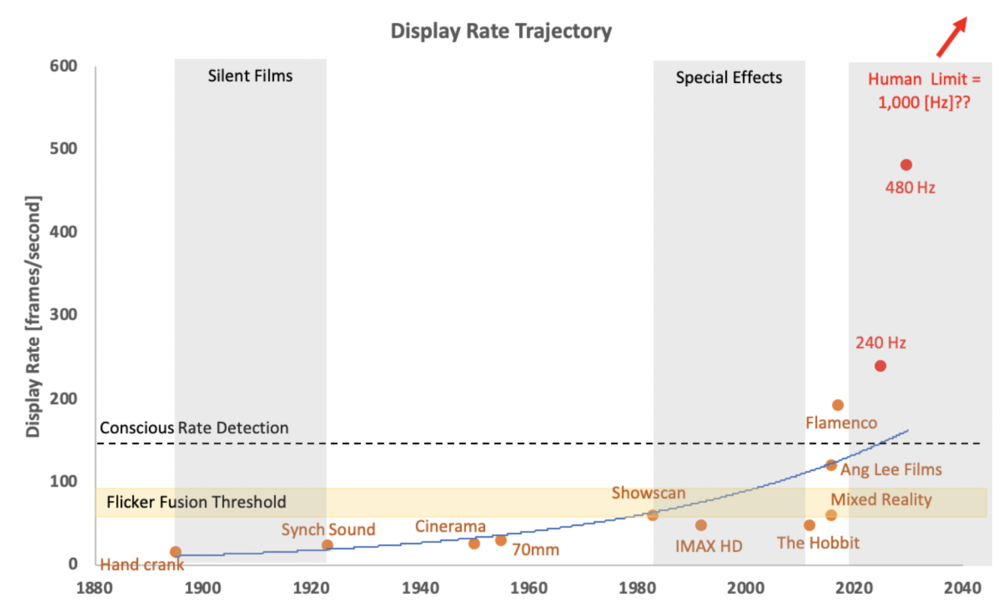

The human visual system can typically perceive images individually at a rate up to 10-12 images per second; anything beyond that is perceived as motion to the human eye. This is the starting point for humans to perceive motion pictures, and it began in earnest in 1891 with the invention of the kinetoscope, the predecessor to the modern picture projector. Although the primary comparison for this FOM resides in the film industry, it is not the only use. The gaming industry, theme parks, and now mixed reality technology all have a stake in optimizing this FOM for performance. | |||

To create a convincing virtual experience, experts claim that 20-30 frames per second is required. That said, there is a long way to go for display rates to truly make the digital world indistinguishable from the real world. There will come a point when the display frame rate for mixed reality devices reaches the temporal and visual limit for human beings. In this case, humans will be unable to distinguish between the virtual environment and the real world. Some editorials refer to this as the “Holodeck Turing Test.” | |||

The rate of improvement for this Figure of Merit is shown in the chart below. Given the trajectory, the technology appears to be in the “Takeoff” stage of maturity (as it relates to the S-Curve). This is further supported by the gulf between the current Figure of Merit value (~120-192 frames/second) and the theoretical limit as we understand it today (~1,000 frames per second). It is reasonable to expect “Rapid Progress” in the near future because the interdependent technologies that have historically limited growth (projection equipment, resolution technology, 3D motion pictures, etc.) are undergoing their own technology takeoff. | |||

[[File:FOM2.png|1000px]] | |||

'''Figure 7''' - Display Rate Trajectory | |||

==Alignment with Company Strategic Drivers== | ==Alignment with Company Strategic Drivers== | ||

==Positioning: Company versus Competition FOM Charts== | ==Positioning: Company versus Competition FOM Charts== | ||

Latest revision as of 04:51, 6 October 2019

Roadmap Overview

Augmented, virtual, and mixed realities lie on a continuum and blur the line between the actual world and the artificial world, both of which are currently perceived through human senses.

Augmented reality devices add digital elements to a live representation of the real world. This can be as simple as overlaying virtual images on the camera view of a smartphone (as recently popularized by mobile applications such as Pokemon Go and Snapchat). Virtual reality, which lies at the other end of the spectrum, seeks to create a completely virtual and immersive environment for the user. Whereas augmented reality overlays digital elements onto a live model of the real world, virtual reality seeks to exclude the real world altogether and transport the user to a new realm through complete telepresence.

Mixed reality, on the other hand, combines both of these ideas to create a hybrid experience in which the user can interact with both the real and the virtual world. Mixed reality devices can take many forms. For the purposes of this technology roadmap, we have narrowed our focus to wearable head-mounted (heads-up) devices. These devices are typically equipped with visual displays and tracking technology that support immersive experiences and six degrees of freedom: three translational (forward/backward, up/down, left/right) and three rotational (pitch, yaw, roll). The elements of form for an example mixed reality product, the Microsoft HoloLens (1st generation), are depicted in the figures below.

Figure 1 - Mixed Reality Example (Microsoft HoloLens)
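To make the six degrees of freedom concrete, the following is a minimal sketch of how a head pose could be represented in software. The class name `Pose6DoF`, the axis conventions, and the units (metres and degrees) are assumptions chosen for illustration; they are not taken from the HoloLens or any other device API.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Pose6DoF:
    """Hypothetical head pose: three translations plus three rotations."""

    x: float = 0.0      # left/right position, metres (assumed convention)
    y: float = 0.0      # up/down position, metres
    z: float = 0.0      # forward/backward position, metres
    pitch: float = 0.0  # rotation about the left/right axis, degrees
    yaw: float = 0.0    # rotation about the up/down axis, degrees
    roll: float = 0.0   # rotation about the forward/backward axis, degrees

    @staticmethod
    def _wrap(angle: float) -> float:
        """Wrap an angle in degrees into the range [-180, 180)."""
        return (angle + 180.0) % 360.0 - 180.0

    def moved(self, dx: float = 0.0, dy: float = 0.0, dz: float = 0.0) -> "Pose6DoF":
        """Return a new pose translated by the given offsets."""
        return replace(self, x=self.x + dx, y=self.y + dy, z=self.z + dz)

    def turned(self, dpitch: float = 0.0, dyaw: float = 0.0, droll: float = 0.0) -> "Pose6DoF":
        """Return a new pose rotated by the given angle increments."""
        return replace(
            self,
            pitch=self._wrap(self.pitch + dpitch),
            yaw=self._wrap(self.yaw + dyaw),
            roll=self._wrap(self.roll + droll),
        )


# Usage: step half a metre forward, then turn the head 190 degrees.
pose = Pose6DoF().moved(dz=0.5).turned(dyaw=190.0)
print(pose)
```

A device limited to three degrees of freedom would track only the rotational fields; tracking all six is what lets a virtual object stay anchored in place as the wearer walks around it.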

In terms of functional taxonomy, this technology is primarily intended to exchange information. The design of these devices today allows information to be exchanged through two of the five human perceptual systems: (1) the visual system and (2) the auditory system. The combination of hardware, software, and informational/environmental inputs allows mixed reality users to interact with virtual objects and anchor them to the real-world environment. One vision for the future is depicted below (courtesy of Barmak Heshmat, MIT Media Lab).

Figure 2 - Sample Mixed Reality Roadmap

Design Structure Matrix (DSM) Allocation

Roadmap Model using Object-Process-Methodology (OPM)

We provide an Object-Process-Diagram (OPD) of the augmented, virtual, and mixed reality roadmap in Figure 4 below. This diagram captures the main object of the roadmap (the mixed reality device), its various instances including main competitors, its decomposition into subsystems (hardware, battery, operating system, etc.), its characterization by Figures of Merit (FOMs), and the main processes (Exchanging, Recharging, and Displaying).

Figure 4 - Mixed Reality OPD

An Object-Process-Language (OPL) description of the roadmap scope is auto-generated and given below. It reflects the same content as the previous figure, but in a formal natural language.

Figure 5 - Mixed Reality OPL

Figures of Merit (FOM) Definition

The table below shows a list of FOMs by which mixed reality devices can be assessed. The first four (shown in bold) were included on the OPD; the others will follow as this technology roadmap is developed. Several of these are similar to the FOMs that are used to compare traditional motion pictures, modern gaming consoles, and other technologies that aim to exchange information.

It's worth noting that several of the FOMs are prerequisites for the mixed reality Immersion / Engagement Level, one of the primary FOMs. In this way, they can be thought of as a FOM chain. Latency and Mapping, for example, contribute greatly to the overall memory retention per use of the mixed reality device. The same is true for many of the FOMs listed below.

Figure 6 - Mixed Reality Figures of Merit

Besides defining the FOMs, this section of the roadmap also contains the FOM trends over time (dFOM/dt) as well as some of the key governing equations that underpin the technology. These governing equations can be derived from physics (or chemistry, biology, etc.), or they can be derived empirically from a multivariate regression model. The specific governing equations for mixed reality technology will be updated as the roadmap progresses.
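As a hedged illustration of how the trend dFOM/dt might be estimated from observations, the expressions below give a finite-difference approximation and an average annual rate of improvement. The symbols t_1, t_2 (two observation years, with t_2 > t_1) and r (the average annual rate) are notation introduced here for illustration; the roadmap itself does not yet specify its governing equations.

```latex
% Average absolute rate of change between two observations
\frac{d\,\mathrm{FOM}}{dt} \approx \frac{\mathrm{FOM}(t_2) - \mathrm{FOM}(t_1)}{t_2 - t_1}

% Average annual (compound) rate of improvement over the same interval
r = \left( \frac{\mathrm{FOM}(t_2)}{\mathrm{FOM}(t_1)} \right)^{\frac{1}{t_2 - t_1}} - 1
```

The first form suits FOMs that improve roughly linearly; the second is the more common choice when a FOM improves by a roughly constant percentage each year.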

Display Rate Trajectory

The human visual system can typically perceive images individually at rates of up to 10-12 images per second; anything faster is perceived as motion. This threshold is what makes motion pictures possible, a medium that began in earnest in 1891 with the invention of the kinetoscope, the predecessor to the modern motion picture projector. Although the primary point of comparison for this FOM is the film industry, it is not the only one. The gaming industry, theme parks, and now mixed reality technology all have a stake in optimizing this FOM for performance.

To create a convincing virtual experience, experts claim that 20-30 frames per second are required. That said, display rates still have a long way to go before the digital world becomes truly indistinguishable from the real world. Eventually, the display frame rate of mixed reality devices will reach the temporal and visual limit of human perception. At that point, humans will be unable to distinguish between the virtual environment and the real world. Some editorials refer to this as the “Holodeck Turing Test.”

The rate of improvement for this Figure of Merit is shown in the chart below. Given the trajectory, the technology appears to be in the “Takeoff” stage of maturity (as it relates to the S-Curve). This is further supported by the gulf between the current Figure of Merit value (~120-192 frames per second) and the theoretical limit as we understand it today (~1,000 frames per second). It is reasonable to expect “Rapid Progress” in the near future because the interdependent technologies that have historically limited growth (projection equipment, resolution technology, 3D motion pictures, etc.) are undergoing their own technology takeoff.

Figure 7 - Display Rate Trajectory
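The gap between the current display rates and the theoretical limit quoted above can be made concrete with a short calculation. The sketch below converts each rate to a per-frame time budget and expresses it as a fraction of the ~1,000 frames per second limit. It also includes a generic logistic function, one common way to model an S-curve; the logistic form and the function names are assumptions for illustration, since the roadmap does not state which S-curve model it uses, and no curve parameters are fitted here.

```python
import math


def frame_interval_ms(fps: float) -> float:
    """Time available to produce one frame, in milliseconds."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


def fraction_of_limit(fps: float, limit_fps: float = 1000.0) -> float:
    """Share of the theoretical limit reached (limit value taken from the text)."""
    return fps / limit_fps


def logistic(t: float, limit: float, midpoint: float, steepness: float) -> float:
    """Generic S-curve: approaches `limit`, with half of it reached at `midpoint`."""
    return limit / (1.0 + math.exp(-steepness * (t - midpoint)))


# Rates quoted in this section: current range and theoretical limit.
for fps in (120.0, 192.0, 1000.0):
    print(
        f"{fps:6.0f} fps -> {frame_interval_ms(fps):6.3f} ms per frame, "
        f"{fraction_of_limit(fps):.1%} of the limit"
    )
```

With the figures quoted in the text, current devices sit at roughly 12-19% of the theoretical limit, which is consistent with a position on the lower portion of an S-curve.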