Revision as of 01:37, 6 November 2023

Continuous Security Monitoring

Roadmap Overview

A Continuous Security Monitoring (CSM) tool provides near-real-time surveillance and analysis of an environment to flag potential security threats, and it is an integral part of any modern cybersecurity framework. Automation is central to its operation, ensuring that the tool offers ongoing insight into the security posture of an environment and improves an organization's ability to manage potential risks.

The technology uses behavior analytics to monitor activity in the environment and pinpoint potentially malicious or anomalous actions. The future of the technology includes advanced predictive analysis using Machine Learning (ML) and Artificial Intelligence (AI), which would let the tool shift from reactive to proactive intelligence. In the long term, quantum computing preparedness will be key to ensuring the tool can manage threats in a post-quantum computing world. The tool may also gain the ability to repair itself automatically, though it currently operates in tandem with human intervention for optimal performance.

Further, the increasing regulation of cyberspace and the introduction of cybersecurity policy suggest that a CSM tool may also become a requirement for regulatory compliance, allowing organizations to stay current with evolving cybersecurity laws and regulations.

The visual below offers insight on where the CSM tool exists in the 5x5 matrix:

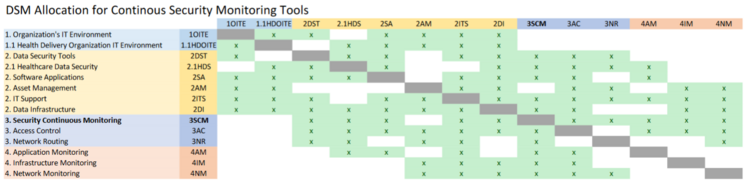

DSM Allocation

This roadmap has the most interdependencies with the Healthcare Data Security (2HDS) roadmap. The Healthcare Data Security roadmap is narrowed to a specific industry, whereas we focus on a generalized, cross-industry application at a deeper technical level of data security: Continuous Security Monitoring. The higher levels are essentially the same across the two roadmaps, differing only in being industry-specific versus generalized. As such, we’ve marked the Healthcare-specific roadmap items as subcategories underneath the non-industry-specific version. We decided to omit the industry-specific items, such as Medical Device Protection, from our DSM.

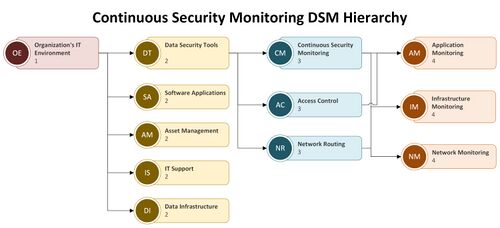

A more detailed view of the levels for the roadmap interactions is provided in hierarchy form.

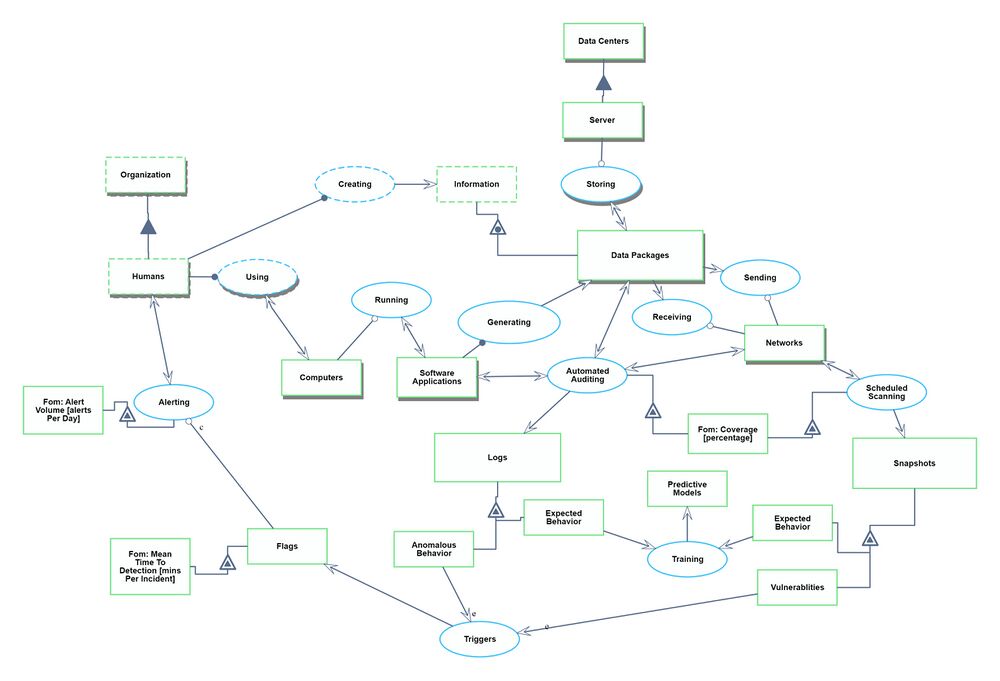

Roadmap Model using OPM (ISO 19450)

We defined a Continuous Security Monitoring (CSM) tool to sit within the environment of an organization's Information Technology (IT) infrastructure. The system contains the IT landscape at a high level, such as software applications, data infrastructure, and network routing. We narrowed the scope to just the monitoring and alerting functionality of a CSM tool. Data security could include additional functionality such as root cause analysis, remediation, and proactive repairs, but we have excluded those from our system.

Note: Automated Auditing refers to the automatic generation of logs and monitoring of networks and applications to ensure compliance with security policies and standards.

The Object-Process Model above can be written in natural language as follows:

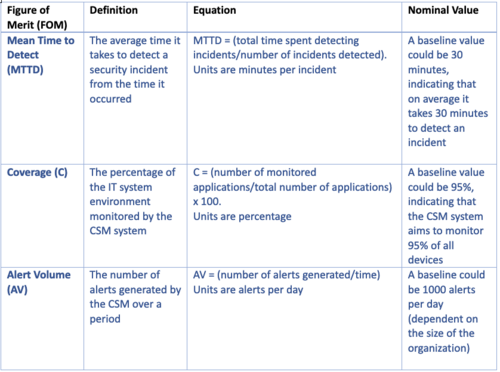

Figures of Merit (FOM): Definition (name, unit, trends dFOM/dt)

The table below delineates three critical Figures of Merit (FOM) for a CSM tool, in order of priority:

The FOM for Mean Time to Detect (MTTD) is plotted over time below. As the technology has evolved, MTTD has decreased, approaching the theoretical limit of zero, or instantaneous detection. However, reaching an absolute zero MTTD may not be possible due to constraints such as processing time, network latency, and inherent limitations in detection algorithms. The sensitive nature of cybersecurity makes data sharing difficult and publicly available data scarce. The novelty of the technology in the cybersecurity domain also contributes to the lack of historical data. Therefore, the graph was constructed from the resources listed in References.
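As a point of reference, MTTD is conventionally computed as the average of (detect time minus start time) over all incidents, which matches the variables this roadmap names (Incident Detect Time, Incident Start Time, Number of Incidents). The sketch below is a minimal illustration under that assumption; the function name and the incident log are hypothetical and are not data from this roadmap.

```python
from datetime import datetime, timedelta


def mean_time_to_detect(incidents):
    """Average (detected - start) over a list of (start, detected) datetime pairs.

    Assumes the conventional MTTD definition; names here are illustrative.
    """
    if not incidents:
        raise ValueError("at least one incident is required")
    total = sum((detected - start for start, detected in incidents), timedelta())
    return total / len(incidents)


# Hypothetical incident log, invented purely for illustration.
incidents = [
    (datetime(2023, 1, 1, 9, 0), datetime(2023, 1, 1, 10, 0)),    # detected after 60 min
    (datetime(2023, 1, 2, 14, 0), datetime(2023, 1, 2, 14, 30)),  # detected after 30 min
]

mttd = mean_time_to_detect(incidents)
print(mttd.total_seconds() / 60)  # 45.0 (minutes)
```

Because only the detect time is under the tool's control, any improvement in MTTD has to come from shrinking the individual (detected - start) gaps.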

Alignment with “company” Strategic Drivers: FOM targets

Positioning of Company vs Competition: FOM charts

Technical Model: Morphological Matrix & Tradespace

Of the variables in MTTD, only Incident Detect Time (also called Detection Time) is within a tool’s control. The Number of Incidents and the Incident Start Time are more often determined by external factors.

For a baseline design, say we have an incident that starts on one of our endpoints. Our tool scans every 30 minutes (R). Each scan covers 600 network events (N). The efficiency (E) of data processing is the ability of the tool to quickly reduce those total network events to only the relevant ones. Efficiency is rated on a scale of 1-10, where 1 is the least efficient, such as a human manually reviewing the network events, and 10 is the most efficient, using a supercomputer for data processing. Our baseline design has an efficiency coefficient of 6, which equates to a modern cloud processing engine that is not fully optimized. Accuracy (A) measures how likely the identified relevant events are to be true security incidents rather than false negatives or false positives. Our baseline design has an accuracy of 80%. For the baseline design, what is the likely time passed from incident start until detection (IDetect)? 62.5 minutes per event. Changes in the parameters and their effect on Detection Time are summarized in the Detect Time tornado chart.
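The governing equation for Detection Time is not reproduced in this section. Purely as an assumption, the form IDetect = (R / 60) × N / (E × A) is one expression that reproduces the stated 62.5 figure from the baseline values; the roadmap's actual equation may differ. The sketch below uses that assumed form, and the function and parameter names are hypothetical.

```python
def detect_time(scan_interval_min, events_per_scan, efficiency, accuracy):
    """Detection Time under an ASSUMED form: (R / 60) * N / (E * A).

    This form is not taken from the source; it is chosen only because it
    reproduces the baseline figure quoted in the text.
    """
    if not 1 <= efficiency <= 10:
        raise ValueError("efficiency is rated on a 1-10 scale")
    if not 0 < accuracy <= 1:
        raise ValueError("accuracy must be a fraction in (0, 1]")
    return (scan_interval_min / 60) * events_per_scan / (efficiency * accuracy)


# Baseline design from the text: R = 30, N = 600, E = 6, A = 0.8
baseline = detect_time(30, 600, 6, 0.8)
print(f"{baseline:.1f}")  # 62.5
```

Under this assumed form, Detection Time falls when the scan interval shortens or when efficiency or accuracy rise, which is the direction of the effects the text describes.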

While this can help us find theoretical optimizations within technical constraints, it does not consider business-related impacts and decisions. For example, an Efficiency of 10 would require a supercomputer, which the average company or CSM tool vendor cannot afford at this time. Likewise, scanning every minute improves the Rate of scanning and thereby the Detection Time, but it is not always feasible cost-wise: scanning that often generates a lot of unnecessary data, slows overall system performance, and increases cost, since CSM tools are sometimes priced by scan or by network activity. Because of these business costs, customers must make their own judgment calls on the risk-to-cost benefit of shortening the scan interval (R) or increasing the Efficiency.

Detection Time can also only be measured on the parts of the customer’s system that are monitored. Coverage is an FOM that measures the percentage of activities in the system that are monitored by a tool, out of the total activities within the system.

If we extend the baseline design from the MTTD example, the 600 network events per scan (N) become the Monitored Activities in the Coverage governing equation. We will assume a baseline design where Total Activities in the System is 700. This design has a coverage of 600 / 700 = 85.7%.
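The coverage figure follows from dividing Monitored Activities by Total Activities in the System. The arithmetic can be sketched as below; the function name is hypothetical, and the input checks are an added assumption rather than part of the roadmap.

```python
def coverage_percent(monitored_activities, total_activities):
    """Coverage as the percentage of total activities that are monitored."""
    if total_activities <= 0:
        raise ValueError("total_activities must be positive")
    if not 0 <= monitored_activities <= total_activities:
        raise ValueError("monitored_activities must be between 0 and total_activities")
    return 100.0 * monitored_activities / total_activities


# Baseline design from the text: 600 monitored out of 700 total activities
baseline_coverage = coverage_percent(600, 700)
print(f"{baseline_coverage:.1f}%")  # 85.7%
```

Raising Monitored Activities toward 700 moves coverage toward 100%, while any growth in Total Activities that is not matched by monitoring lowers it.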

As with Detection Time, the governing equations and optimizations for Coverage do not take into account the business impact of these inputs. For example, the least risky Coverage would be to monitor 100% of all activities within the system. This is not always feasible due to cost or data processing constraints, similar to the constraints on Number of Network Activities in Detection Time. Companies may also define coverage differently depending on whether they account for open-source or third-party connections. Companies that use third-party tooling might assume that the tool vendor has complete coverage within their system, which is itself an assumption of risk. Customers need to make their own judgment call on cost versus risk when determining the level of coverage required in their system.

Key Publications and Patents

References

[1] Cybersecurity Ventures. 2020. History of hacking and defenses. Retrieved from https://cybersecurityventures.com/the-history-of-cybercrime-and-cybersecurity-1940-2020/

[2] Statista. 2023. Median time from compromise to discovery in days of larger organizations worldwide from 2014 to 2019. Retrieved from https://www.statista.com/statistics/221406/time-between-initial-compromise-and-discovery-of-larger-organizations/

[3] Gartner. 2023. List of Critical Capabilities for Cloud Access Security Brokers. Retrieved from https://www.gartner.com/document/4366499?ref=solrAll&refval=381285986&qid=

[4] Oberheide, J., & Cooke, E. 2008. CloudAV: N-Version Antivirus in the Network Cloud. Retrieved from https://www.semanticscholar.org/paper/CloudAV%3A-N-Version-Antivirus-in-the-Network-Cloud-Oberheide-Cooke/1ca0b25bd4fe4994f7f5e334987588776a1a123c

[5] Secureworks. 2017. The Evolution of Intrusion Detection & Prevention. Retrieved from https://www.secureworks.com/blog/the-evolution-of-intrusion-detection-prevention

[6] ISACA. 2021. The Evolution of Security Operations and Strategies for Building an Effective SOC. ISACA Journal, 5. Retrieved from https://www.isaca.org/resources/isaca-journal/issues/2021/volume-5/the-evolution-of-security-operations-and-strategies-for-building-an-effective-soc

[7] Pure Storage. 2023. What is SOAR? Retrieved from https://www.purestorage.com/knowledge/what-is-soar.html

[8] Brand Essence Research. 2023. Security Orchestration, Automation and Response Market. Retrieved from https://brandessenceresearch.com/security/security-orchestration-automation-and-response-market

[9] CrowdStrike. 2023. Falcon Insight XDR. Retrieved from https://www.crowdstrike.com/products/endpoint-security/falcon-insight-xdr/

[10] CrowdStrike. 2023. CrowdStrike Announces General Availability of Falcon XDR. Retrieved from https://www.crowdstrike.com/press-releases/crowdstrike-announces-general-availability-of-falcon-xdr/