Radar for Autonomous Vehicles and Mobile Robots

Technology Roadmap Sections and Deliverables

- 3BOTRDR - Radar for Autonomous Vehicles and Mobile Robots

Roadmap Overview

This technology roadmap explores the use of radar sensors in autonomous vehicles (AVs) and mobile robots. As more everyday tasks are automated and the need for human intervention shrinks, sensors such as radar become increasingly important enablers of autonomy. Mobile robots and autonomous vehicles alike need to sense objects and features in their surroundings to navigate and interact with the world; radar sensors can be used to accomplish this. This level 3 roadmap evaluates radar's interactions with level 2 functions such as power systems, control systems, and autonomous navigation, and also introduces level 4 technologies within radar for further evaluation.

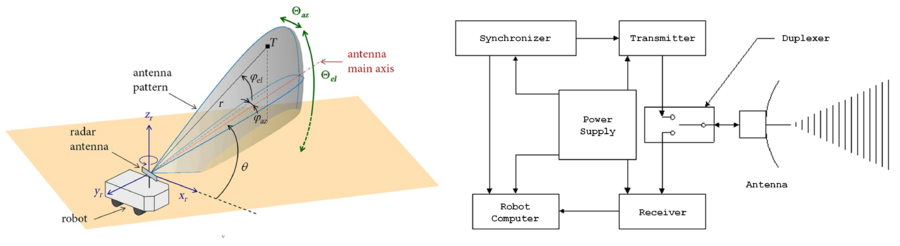

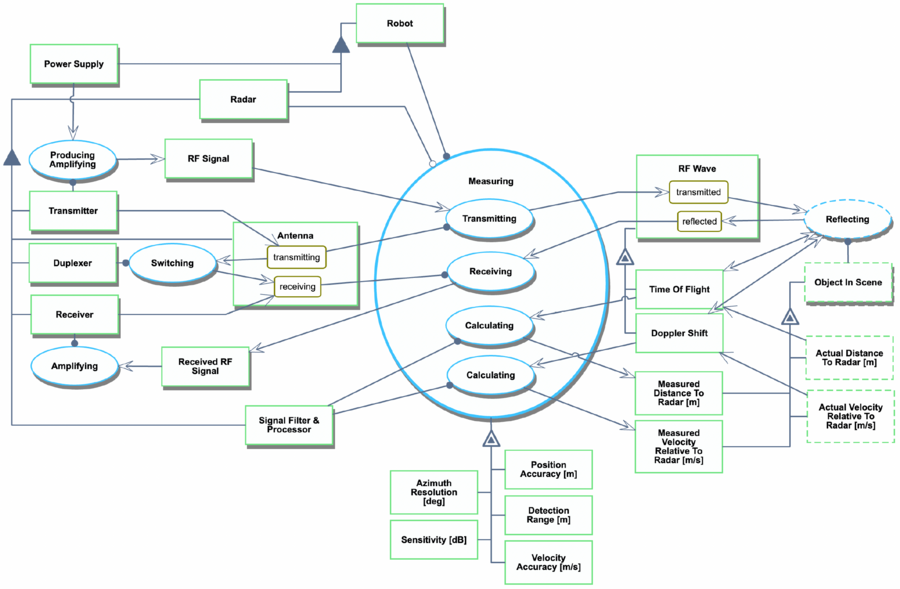

Radar within AVs and mobile robots works by emitting radio-frequency waves into the environment. When the waves hit features in the scene, some energy is absorbed, some scatters away, and some reflects back toward the radar head, which receives it. By measuring the time of flight, Doppler shift, and other attributes of the reflected wave, a radar can estimate the distance to an object and its relative (radial) velocity. Some radar companies have developed their own algorithms to determine other attributes of features as well.
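The two relationships described above can be sketched in a few lines. This is a simplified illustration rather than how any particular radar product works: it assumes a monostatic radar (transmitter and receiver co-located), a directly measured round-trip time, and a target moving much slower than the speed of light. The function names and the 77 GHz carrier used in the example are assumptions chosen for illustration, not values taken from this roadmap.

```python
# Simplified range and radial-velocity estimates for a monostatic radar.
# Function names and example values are hypothetical, for illustration only.

C = 299_792_458.0  # speed of light in vacuum, m/s


def range_from_time_of_flight(round_trip_s: float) -> float:
    """Distance to the target in metres.

    The wave travels to the target and back, so the one-way
    distance is half of the total path length.
    """
    return C * round_trip_s / 2.0


def radial_velocity_from_doppler(doppler_shift_hz: float, carrier_hz: float) -> float:
    """Relative radial velocity in m/s (positive means the target is closing).

    For a monostatic radar and speeds far below c, the two-way
    Doppler shift is f_d = 2 * v / wavelength, so v = f_d * wavelength / 2.
    """
    wavelength_m = C / carrier_hz
    return doppler_shift_hz * wavelength_m / 2.0


if __name__ == "__main__":
    # Assumed example: echo arrives 1 microsecond after transmission,
    # with a +10 kHz Doppler shift on an assumed 77 GHz carrier.
    print(f"range:    {range_from_time_of_flight(1e-6):.1f} m")
    print(f"velocity: {radial_velocity_from_doppler(10e3, 77e9):.2f} m/s")
```

Note that the Doppler shift only reveals the velocity component along the line of sight; motion across the beam produces no shift in this simple model.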

Radar is not the only option for exteroceptive sensing in robotics and autonomy; LiDARs and visible-light cameras are ubiquitous in the industry. Radar, however, has several advantages: long range, accurate measurement of an object's relative velocity, low power consumption, and all-weather performance. Despite these advantages, radar has recently been a source of contention in the AV and advanced driver-assistance system (ADAS) developer community. Radar does not perform as well as other sensors when measured by traditional sensing figures of merit (FOMs), such as resolution and depth accuracy. Its direct output is also often incompatible with classical robotics detection algorithms, which makes it hard for many established firms to integrate into their systems. For these reasons, many AV and ADAS developers either use radar only alongside other sensors through sensor fusion or reject radar technology outright. This technology roadmap explores the benefits of radar and the opportunities to implement it while achieving the goals of autonomous vehicles and mobile robots.

References

- https://www.radartutorial.eu

- https://www.researchgate.net/figure/Position-of-radar-on-a-robot-Radar-is-typically-located-at-a-height-hr-between-1m-and-3m_fig5_301273977

- https://man.fas.org/dod-101/navy/docs/es310/radarsys/radarsys.htm

Design Structure Matrix (DSM) Allocation

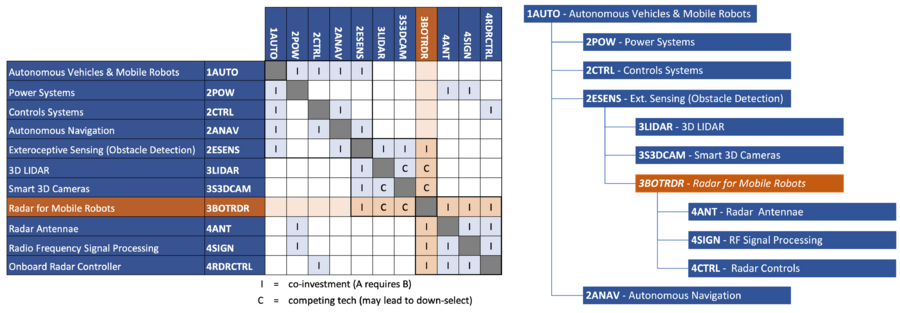

The DSM below shows the relationships between the 3BOTRDR technology roadmap and other roadmaps of interest. 3BOTRDR (radar for robotics & AVs) is a level 3 roadmap within the level 2 technology 2ESENS (exteroceptive sensing and obstacle detecting). The highest-level initiative is 1AUTO: robotics & autonomous vehicles.

The roadmap hierarchy, shown below to the right of the DSM, was extracted from that DSM.

Roadmap Model using OPM

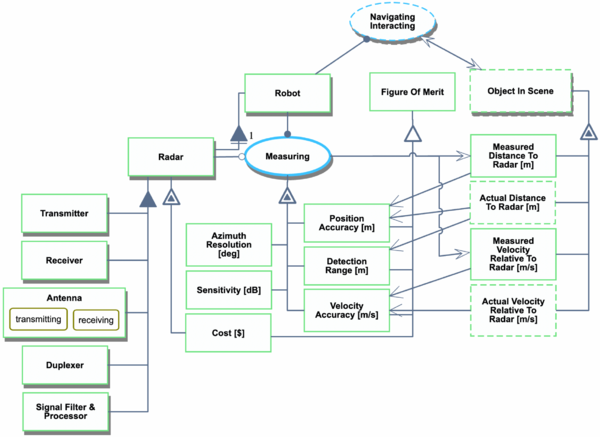

The Object-Process Diagram (OPD) of the 3BOTRDR Radar for Robotics & AVs roadmap is provided in the figure below. This diagram captures the main object of the roadmap (Radar), its purpose, its various processes and instrument objects, and its characterization by Figures of Merit (FOMs).

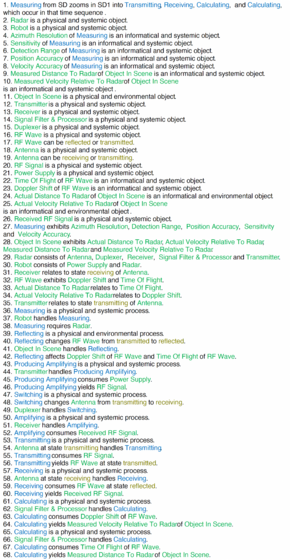

The autogenerated Object-Process-Language (OPL) is included (right) for clarity.

The "Measuring" Process is further detailed to show sub-processes and their instrument objects. Some objects, processes, and relationships are removed for clarity.

The autogenerated OPL for the "Measuring" process is likewise included (right) for clarity.