Earth Observation Satellites

Technology Roadmap Sections and Deliverables

Roadmap Overview

Design Structure Matrix (DSM) Allocation

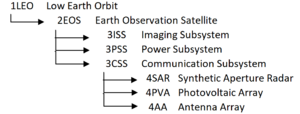

We have designated Earth Observation Satellites (2EOS) as a Level 2 technology. It lies at the intersection of two Level 1 markets: 1) Low-Earth Orbital Technologies and 2) Remote Sensing Technologies. For other examples of technologies at the intersection of these markets, see the accompanying figure.

Our Design Structure Matrix (DSM) is as follows:

Legend:

- Green: direct component (e.g., solar arrays are a component of the Power Subsystem)

- Yellow: cross-relationships between technologies and other subsystems (e.g., heat pipes interact with all other subsystems to transfer heat)

- Orange: physical interactions between Level 4 technologies within the same subsystem (e.g., solar arrays interact with any deployment and angling mechanisms)
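A DSM is a square matrix indexed by system elements, so in software it can be held as a map from element pairs to a relationship type. The sketch below is a minimal, hypothetical encoding (the `Link` and `DSM` names are our own, not from any DSM tool) that records only the legend's three examples. It assumes the matrix is symmetric, which not every DSM is, and it links heat pipes only to the two subsystems named elsewhere in this roadmap as a stand-in for "all other subsystems"; "Deployment Mechanism" is likewise a hypothetical label.

```python
from __future__ import annotations

from enum import Enum


class Link(Enum):
    """DSM cell categories, mirroring the colour legend."""
    DIRECT_COMPONENT = "green"       # element is a component of a subsystem
    CROSS_RELATIONSHIP = "yellow"    # technology interacts with other subsystems
    PHYSICAL_INTERACTION = "orange"  # Level 4 technologies in the same subsystem


class DSM:
    """Symmetric DSM: each unordered pair of elements holds at most one category."""

    def __init__(self) -> None:
        self._cells: dict[frozenset[str], Link] = {}

    def mark(self, a: str, b: str, kind: Link) -> None:
        if a == b:
            raise ValueError("diagonal cells are not used")
        self._cells[frozenset((a, b))] = kind

    def link(self, a: str, b: str) -> Link | None:
        """Category of the (a, b) cell, or None if the cell is empty."""
        return self._cells.get(frozenset((a, b)))

    def neighbours(self, element: str) -> dict[str, Link]:
        """Every element that shares a marked cell with `element`."""
        out: dict[str, Link] = {}
        for pair, kind in self._cells.items():
            if element in pair:
                (other,) = pair - {element}
                out[other] = kind
        return out


# Only the legend's own examples; "Deployment Mechanism" is a hypothetical label.
dsm = DSM()
dsm.mark("Solar Arrays", "Power Subsystem", Link.DIRECT_COMPONENT)
dsm.mark("Solar Arrays", "Deployment Mechanism", Link.PHYSICAL_INTERACTION)
# Stand-in for "all other subsystems": only the two named in this roadmap.
for subsystem in ("Power Subsystem", "Imaging Subsystem"):
    dsm.mark("Heat Pipes", subsystem, Link.CROSS_RELATIONSHIP)

print(dsm.neighbours("Solar Arrays"))
```

Keying cells by an unordered `frozenset` makes the lookup order-independent, so `dsm.link("Power Subsystem", "Solar Arrays")` and `dsm.link("Solar Arrays", "Power Subsystem")` return the same category.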

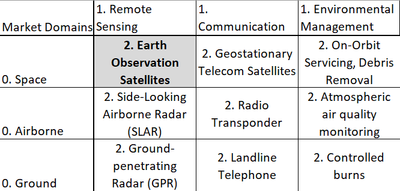

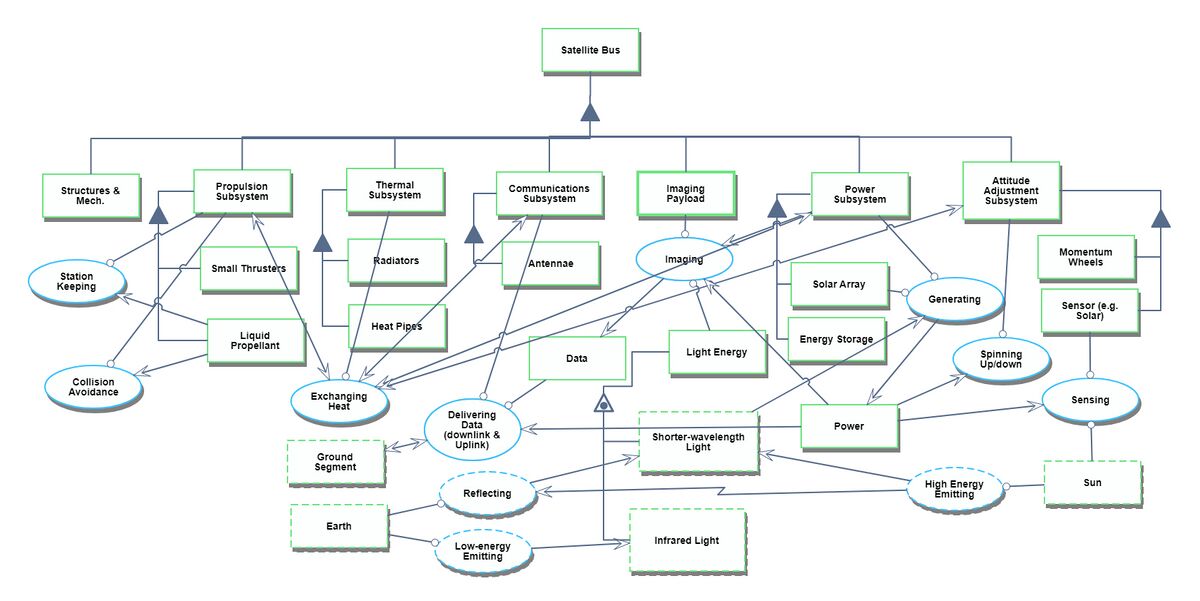

Roadmap Model using OPM

Our Object Process Diagram (OPD), built with the Object Process Methodology (OPM), is pictured in the accompanying figure, complete with a zoomed-in level that examines the components of the Imaging Subsystem.

Figures of Merit (FOMs)

Several relevant Figures of Merit exist for Earth Observation Satellites. The imaging system in particular is constrained by four types of resolution:

- Spatial [m]

- Spectral [nm]

- Temporal [days] (also called revisit period)

- Radiometric [bits]
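The four resolutions above can be carried together as one record. In this minimal sketch (the `ImagingResolution` class and the example values are hypothetical), the only derived quantity is the number of brightness levels implied by the radiometric resolution, 2 raised to the number of bits. Note the differing directions: smaller values are better for spatial, spectral, and temporal resolution, while more bits are better for radiometric resolution.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ImagingResolution:
    """The four resolution FOMs of an imaging payload, in the units listed above."""
    spatial_m: float        # ground distance resolved per pixel [m]; smaller is better
    spectral_nm: float      # width of a spectral band [nm]; smaller is better
    temporal_days: float    # revisit period [days]; smaller is better
    radiometric_bits: int   # bits per pixel sample; larger is better

    @property
    def grey_levels(self) -> int:
        """Distinct brightness levels a pixel can record: 2 ** bits."""
        return 2 ** self.radiometric_bits


# Hypothetical values, for illustration only.
example = ImagingResolution(spatial_m=30.0, spectral_nm=60.0,
                            temporal_days=16.0, radiometric_bits=12)
print(example.grey_levels)  # 4096
```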

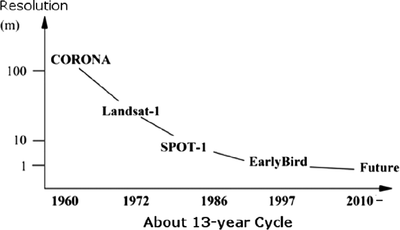

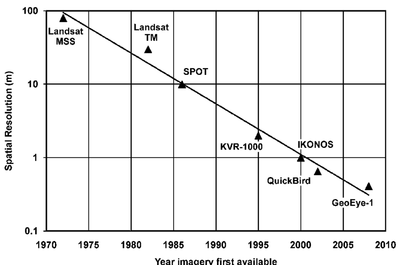

We consider spatial resolution the principal FOM for most applications, including surveying, land management, urban planning, and disaster relief. The four resolutions trade off against one another. Generally, spatial resolution decreases as spectral resolution increases: a minimum threshold of energy must reach the imaging sensor to resolve an image, and the narrower the band of electromagnetic radiation considered, the less energy each pixel receives, so each pixel must generally cover a larger ground area to collect enough energy. Wide (panchromatic) bands have more difficulty discerning color and material reflectivity, but gain spatial resolution. Radiometric resolution is constrained primarily by cost and by the space available for the imaging payload within the bus. The accompanying figures show estimates of the improvement in spatial resolution over time.

(Left: Zhou 2010; Right: Fowler 2010)


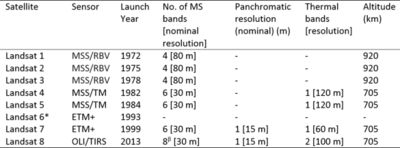
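The spatial-spectral tradeoff described above can be made concrete with a deliberately simplified model. Assuming that the energy collected per pixel scales as E ∝ Δλ · GSD² (band width times ground-pixel area), with optics, integration time, and scene radiance held fixed, keeping E at the sensor's threshold gives GSD ∝ 1/√Δλ. This first-order sketch ignores detector noise and the spectral variation of scene radiance; the function name and the reference values are hypothetical.

```python
import math


def min_gsd_m(band_nm: float, ref_gsd_m: float, ref_band_nm: float) -> float:
    """Smallest ground sample distance reachable in a band of width `band_nm`.

    First-order assumption: energy per pixel ~ band width * pixel area, with
    optics, integration time and scene radiance unchanged from the reference.
    Holding that energy at the reference threshold gives GSD ~ 1 / sqrt(band width).
    """
    if band_nm <= 0 or ref_band_nm <= 0 or ref_gsd_m <= 0:
        raise ValueError("band widths and reference GSD must be positive")
    return ref_gsd_m * math.sqrt(ref_band_nm / band_nm)


# Hypothetical reference: a 400 nm wide panchromatic band resolving 0.5 m.
for band in (400.0, 100.0, 25.0):
    print(f"{band:5.0f} nm band -> {min_gsd_m(band, 0.5, 400.0):.2f} m")
```

Under this model, narrowing the band by a factor of 4 doubles the minimum resolvable ground distance, which is the qualitative behaviour the text describes for panchromatic versus multispectral bands.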

A 10x improvement in spatial resolution over a 13-15 year cycle implies that the minimum resolvable distance declines (i.e., resolution improves) by roughly 14-16% per year. Improvements in spectral resolution are evident in the increasing number of bands on successive Landsat missions: Landsat 1 carried only 4 visible/near-visible bands, while Landsat 8 (the most recent mission at the time of writing) detects 8 distinct visible/near-visible bands, one wide panchromatic band, and two long-wave thermal infrared bands.
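The annual rate follows from assuming constant exponential improvement: if the minimum resolvable distance shrinks by a factor of 10 over T years, each year it is multiplied by 10^(-1/T). A short check across the 13-15 year range (the function name is our own):

```python
def annual_decline(factor: float, years: float) -> float:
    """Fractional yearly decrease in minimum resolvable distance.

    Assumes a constant exponential rate: the distance shrinks by `factor`
    over `years`, so each year it is multiplied by factor ** (-1 / years).
    """
    if factor <= 0 or years <= 0:
        raise ValueError("factor and years must be positive")
    return 1.0 - factor ** (-1.0 / years)


for years in (13, 14, 15):
    print(f"10x over {years} years -> {annual_decline(10.0, years):.1%} per year")
```

Under this assumption, ~14% per year corresponds to the 15-year end of the range and ~16% per year to the 13-year end.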

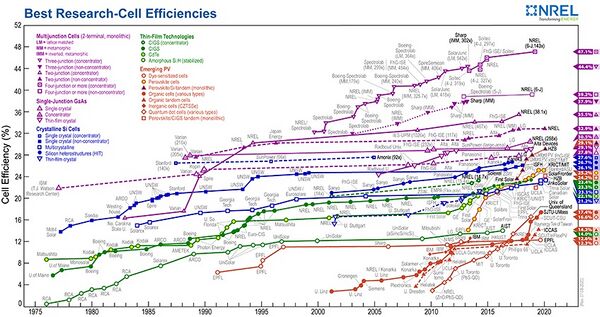

Another highly relevant FOM is the power draw of the imager relative to the power output of the solar arrays. If the imager's power draw is too high, it may reduce the rate at which the imager can capture images, creating data gaps and lowering temporal resolution. Several quantitative measures capture this relationship, including the unitless fraction of generated power consumed by the imager, the unitless efficiency of the cells, and the power output of the solar arrays per unit area [W/m²] and per unit mass [W/kg]. Smaller, lighter arrays cost less to install and launch, freeing more funds for the imaging subsystem. The improvement of solar cell power efficiency over time is shown in the accompanying figure.
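The power measures listed above can be computed directly from a handful of array and imager parameters. This is a minimal sketch assuming the array faces the Sun directly outside eclipse, so the incident power is the solar constant (about 1361 W/m² at 1 AU) times the array area; the function name and all example values are hypothetical.

```python
SOLAR_CONSTANT_W_M2 = 1361.0  # approximate total solar irradiance at 1 AU


def power_foms(array_power_w: float, array_area_m2: float,
               array_mass_kg: float, imager_power_w: float) -> dict[str, float]:
    """Power FOMs for a solar array and imager.

    Assumes the array faces the Sun directly, outside eclipse, so the incident
    power is the solar constant times the array area.
    """
    return {
        # Array-level efficiency; cell efficiency is typically higher because
        # packing and wiring losses are folded into this figure.
        "array_efficiency": array_power_w / (SOLAR_CONSTANT_W_M2 * array_area_m2),
        "power_per_area_w_m2": array_power_w / array_area_m2,
        "power_per_mass_w_kg": array_power_w / array_mass_kg,
        "imager_fraction": imager_power_w / array_power_w,
    }


# Hypothetical array and imager, for illustration only.
foms = power_foms(array_power_w=600.0, array_area_m2=2.0,
                  array_mass_kg=10.0, imager_power_w=150.0)
for name, value in foms.items():
    print(f"{name}: {value:.3f}")
```

A real power budget would also account for the eclipse fraction of each orbit and battery losses, which this sketch leaves out.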

Finally, the bit error rate (BER) of the communications channel is a relevant Figure of Merit for satellites. If the BER is too high, it can either slow the system down by necessitating redundancy, or effectively reduce spatial resolution by decreasing the confidence in any one pixel, which necessitates spatial aggregation of the data in post-processing. The BER depends on some system-level factors, such as the chosen modulation scheme, but also on many external variables, including interference from the transmission medium; as such, no clear figure showing its improvement over time has yet been identified.
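As an illustration of how the modulation scheme sets a baseline BER, the textbook result for uncoded BPSK on an additive white Gaussian noise (AWGN) channel is BER = ½·erfc(√(Eb/N0)). The sketch below assumes that idealized channel and independent bit errors, so it deliberately omits the interference and medium effects noted above; the function names and the image size are hypothetical.

```python
import math


def bpsk_ber(ebn0_db: float) -> float:
    """Theoretical BER of uncoded BPSK on an ideal AWGN channel: 0.5*erfc(sqrt(Eb/N0))."""
    ebn0 = 10.0 ** (ebn0_db / 10.0)  # convert dB to a linear ratio
    return 0.5 * math.erfc(math.sqrt(ebn0))


def expected_bit_errors(ber: float, n_bits: int) -> float:
    """Mean number of flipped bits when `n_bits` cross a channel with this BER."""
    return ber * n_bits


def p_error_free(ber: float, n_bits: int) -> float:
    """Probability that `n_bits` arrive with no errors, assuming independent bit errors."""
    return (1.0 - ber) ** n_bits


ber = bpsk_ber(9.6)
image_bits = 8_000_000 * 12  # hypothetical 8-megapixel image at 12 bits per pixel
print(f"BER ~ {ber:.1e}")
print(f"expected bit errors per image ~ {expected_bit_errors(ber, image_bits):.0f}")
print(f"P(1 KiB packet error-free) ~ {p_error_free(ber, 8192):.3f}")
```

Even a BER near 1e-5 leaves hundreds of corrupted bits in a single large image, which illustrates why the text notes that a high BER forces either redundancy or spatial aggregation.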