Computer-Aided Detection Leveraging Machine Learning and Augmented Reality

Technology Roadmap Sections and Deliverables

- 2AIAR - Computer-Aided Detection Leveraging Machine Learning and Augmented Reality

Roadmap Overview

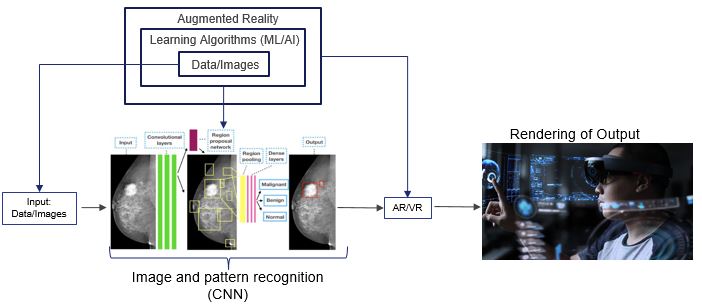

The working principle and architecture of CAD Leveraging Machine Learning and Augmented Reality are depicted in the figure below.

This technology combines Computer-Aided Detection (CAD), based on the Faster R-CNN deep learning model, with Augmented Reality (AR) for a unique 3D rendering experience. Compared with what is available today, which is simple CAD using high-resolution image processing, this experience increases the accuracy of interpretation and therefore supports more appropriate actions for problem solving. Faster R-CNN [9] is based on a convolutional neural network with additional components for detecting, localizing, and classifying objects in an image. It adds a branch of convolutional layers, called the Region Proposal Network (RPN), on top of the last convolutional layer of the original network; the RPN is trained to detect and localize objects in the image regardless of their class. What differentiates this model is that it optimizes the object detection and classification parts of the model at the same time.
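The idea of optimizing detection and classification at the same time can be illustrated with a single combined loss. The following is a minimal sketch for one anchor and one proposal, assuming cross-entropy terms for objectness and class and a smooth L1 term for box offsets, summed without weights. The function names, argument layout, and equal weighting are illustrative assumptions, not the roadmap's implementation.

```python
import math

EPS = 1e-12  # avoids log(0); an implementation detail of this sketch


def binary_cross_entropy(p_object, is_object):
    """Class-agnostic objectness loss for one RPN anchor (is_object is 0 or 1)."""
    return -(is_object * math.log(p_object + EPS)
             + (1 - is_object) * math.log(1 - p_object + EPS))


def cross_entropy(class_probs, class_label):
    """Classification loss for one proposal, given per-class probabilities."""
    return -math.log(class_probs[class_label] + EPS)


def smooth_l1(predicted, target):
    """Box regression loss summed over the box coordinates."""
    total = 0.0
    for p, t in zip(predicted, target):
        diff = abs(p - t)
        total += 0.5 * diff * diff if diff < 1.0 else diff - 0.5
    return total


def joint_loss(p_object, is_object, anchor_offsets, anchor_targets,
               class_probs, class_label, box_offsets, box_targets):
    """Sum of the RPN terms and the detection-head terms (unweighted, by assumption)."""
    rpn = binary_cross_entropy(p_object, is_object)
    if is_object:
        # Box regression only applies to anchors that contain an object.
        rpn += smooth_l1(anchor_offsets, anchor_targets)
    head = cross_entropy(class_probs, class_label) + smooth_l1(box_offsets, box_targets)
    return rpn + head
```

Because both groups of terms feed one scalar, a single gradient step updates the shared convolutional layers for localization and classification together.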

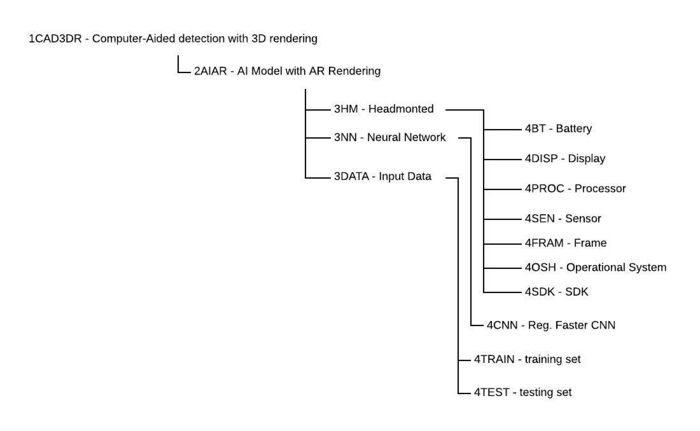

Design Structure Matrix (DSM) Allocation

The 2AIAR tree that we can extract from the DSM above shows that the AI Model for AR Rendering aims to bring two emerging technologies together so that they complement each other and deliver disruptive value. Both integrate to DETECT and RENDER images or interactive content using the following subsystems at level 3: 3HM head-mounted components, 3NN Neural Network algorithms, and 3DATA. In turn, these require enabling technologies at level 4, the technology component level: 4CNN, the type of layer used for the neural network algorithm; 4TRAIN, the data set used to train the model; and 4TEST, used for testing it.
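The naming convention above, where the leading digit of each identifier gives its level in the decomposition, can be sketched as a small lookup. The dictionary only groups the identifiers named in the text by level; it deliberately does not assert which level 4 component belongs to which level 3 subsystem, since the text does not state that mapping. The names `ROADMAP_ELEMENTS`, `level_of`, and `levels_are_consistent` are hypothetical.

```python
# Roadmap elements named in the text, grouped by decomposition level.
ROADMAP_ELEMENTS = {
    2: ["2AIAR"],
    3: ["3HM", "3NN", "3DATA"],
    4: ["4CNN", "4TRAIN", "4TEST"],
}


def level_of(identifier):
    """Read the level from the leading digits of an identifier such as '3NN'."""
    digits = ""
    for ch in identifier:
        if not ch.isdigit():
            break
        digits += ch
    if not digits:
        raise ValueError(f"identifier {identifier!r} has no level prefix")
    return int(digits)


def levels_are_consistent(elements):
    """Check that every identifier is filed under the level its prefix encodes."""
    return all(level_of(name) == level
               for level, names in elements.items()
               for name in names)
```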

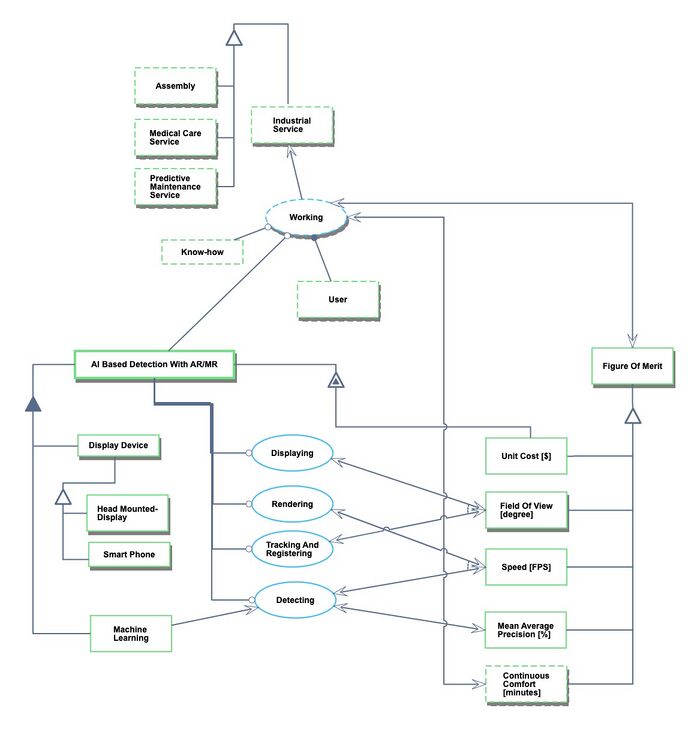

Roadmap Model using OPM

We provide an Object-Process Diagram (OPD) of the 2CADAR roadmap in the figure below. This diagram captures the main object of the roadmap (AI and AR), its decomposition into subsystems (i.e., display devices such as head-mounted displays and smartphones, algorithms, and models), its characterization by Figures of Merit (FOMs), and its main processes (Detecting, Rendering).

An Object-Process Language (OPL) description of the roadmap scope is auto-generated and given below. It reflects the same content as the previous figure, but in a formal natural language.

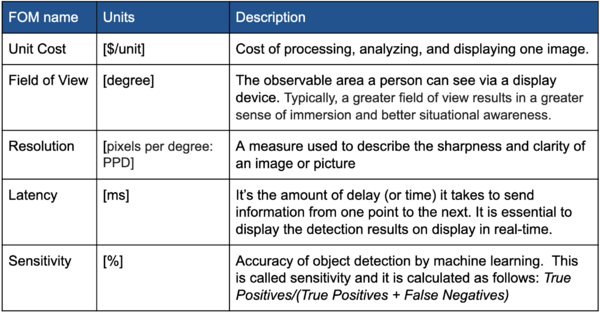

Figures of Merit

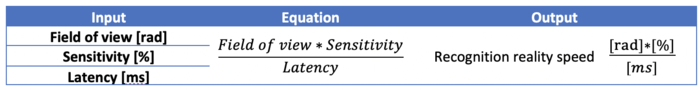

The table below shows a list of FOMs by which CAD with Machine Learning and AR can be assessed. The first FOM, cost per unit, applies to the integrated solution of CAD with AI and AR and represents the combined utility of both functions: detecting (ML/AI) and rendering (AR). The next two FOMs are specific to Augmented Reality: Field of View and Resolution. The last two FOMs are for the Machine Learning model: Sensitivity (related to accuracy) and Latency.
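The two Machine Learning FOMs can be made concrete with a short sketch. It assumes the standard definition of sensitivity, true positives divided by the sum of true positives and false negatives, and measures latency as the median wall-clock time of repeated calls, reported in milliseconds. The unit, the repeat count, and the `detect` callable are assumptions for illustration; the roadmap does not specify how either FOM is measured.

```python
import statistics
import time


def sensitivity(true_positives, false_negatives):
    """Fraction of actual positives that the detector found: TP / (TP + FN)."""
    total = true_positives + false_negatives
    if total == 0:
        raise ValueError("sensitivity is undefined without any positive cases")
    return true_positives / total


def median_latency_ms(detect, sample, repeats=5):
    """Median wall-clock time of detect(sample) over several runs, in milliseconds."""
    timings = []
    for _ in range(repeats):
        start = time.perf_counter()
        detect(sample)  # hypothetical detector callable supplied by the caller
        timings.append((time.perf_counter() - start) * 1000.0)
    return statistics.median(timings)
```

For example, a detector that finds 90 of 100 true findings has `sensitivity(90, 10) == 0.9`. Using the median rather than the mean keeps a single slow run from dominating the latency figure.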

The table below contains a FOM to track over time; it combines three of the primary FOMs that underlie the technology. The purpose is to track accuracy, speed, and field of view, which are important for the overall user experience.